Ape-tizers:

- REI is a crypto-native research organization focused on reimagining artificial intelligence from first principles.

- The Core framework separates language processing, memory, and cognition into distinct systems for improved efficiency and scalability.

- REI reports that minimizing reliance on monolithic LLMs has cut hallucinations by well over 70%.

We’ve all been there: discussing a hot topic over drinks or dinner, only to hit that one argument that’s just nuanced enough to bring everything to a halt because, let’s face it, neither side actually knows what they’re talking about.

Then, we realize that we live in the 21st century. We pull out the trusty ChatGPT app on our phones and let the machine think for us. Personally, even if I use Grok or Perplexity, I still tell my normie friends I “asked ChatGPT” because to them, it’s all the same.

But not for us, the Crypto x AI enthusiasts. We understand the differences: parameters, training data, and model design all produce different results. Some LLMs excel at text generation, others at reasoning or coding.

Despite their impressive capabilities, today's LLMs show significant failure patterns in real-world testing: they fabricate financial metrics, spread medical misinformation, and lack cumulative learning, approaching a repeated problem as if they had never seen it before.

The plateau no one saw coming

Over the past few years, we’ve had the pleasure of witnessing the most rapid advancement and proliferation of technology in our species' history.

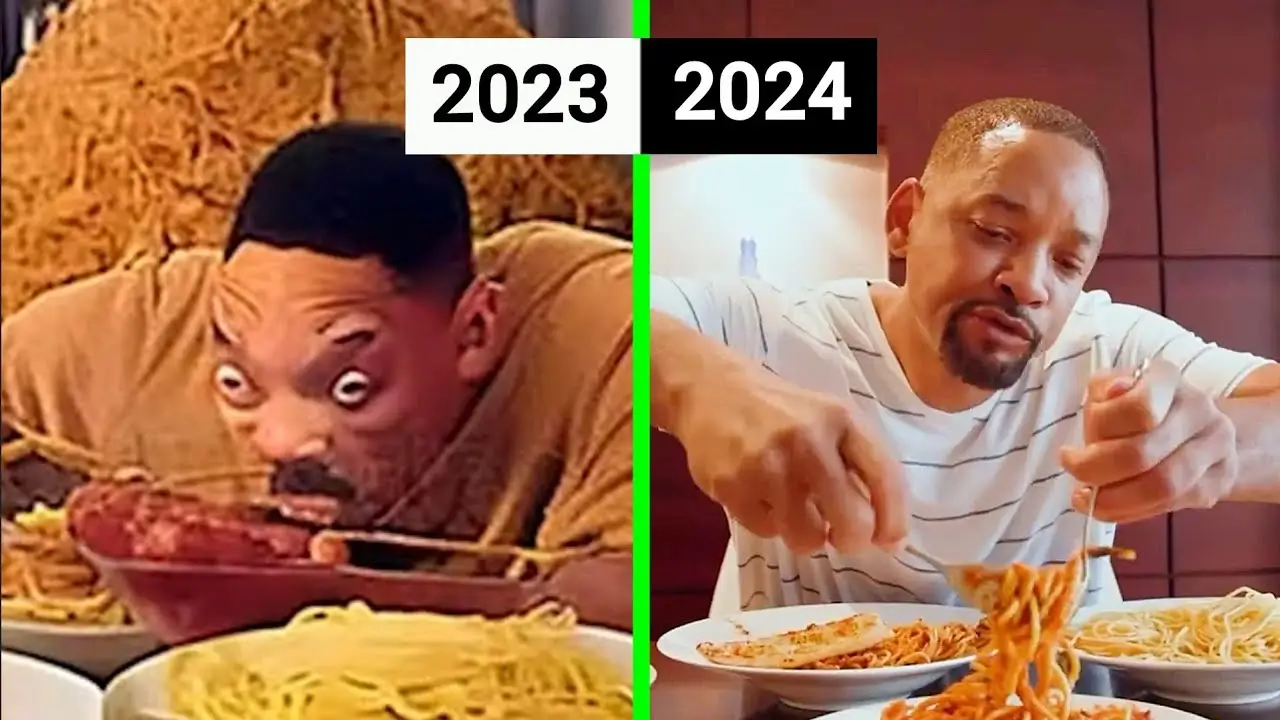

An example that paints the clearest picture (literally) of just how far we’ve come is the famous footage of Will Smith eating spaghetti.

Looking back, it was terrible. But at the time, we just watched in amazement as a computer system created a video of a human eating from a bowl. Fast forward to 2025, and we now see photorealistic videos that are almost indistinguishable from real life.

Essentially, the entire world, including me, believes that AGI will arrive tomorrow, simply because the technology is advancing so quickly.

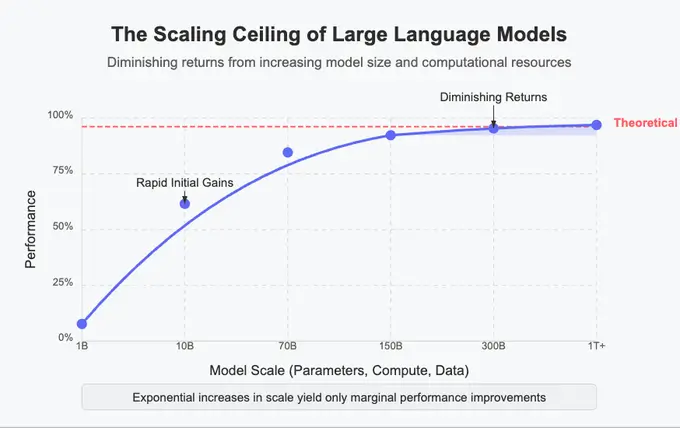

For years, the formula seemed simple: throw more data and compute at the problem, crank up the parameter count, and voilà, a better LLM. And for a while, that worked.

But recently, the gains from this brute-force approach have started to level off. We’re hitting a point of diminishing returns, especially in reasoning ability. More parameters now mean more cost without proportional improvements in performance.

So here’s the question: If more compute and bigger models aren't enough, what's next?

This isn't something you can fix with more data or GPUs. The bottleneck is fundamental: it lies in how LLMs process and generate answers. Despite all the hype, LLMs aren’t actually “thinking.” They’re guessing the next word based on probability. That’s powerful, but it has limits.
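To make “guessing the next word based on probability” concrete, here’s a toy sketch in Python. The candidate words and their scores are made up; a real LLM computes scores for its entire vocabulary with a neural network, but the last step, turning scores into probabilities and picking a word, works broadly like this.

```python
import math
import random
from typing import Dict, Optional

# Hypothetical scores (logits) a model might assign to candidate next words
# after the prompt "The cat sat on the". Real models score a whole vocabulary.
LOGITS = {"mat": 3.2, "sofa": 2.1, "roof": 1.4, "moon": -1.0}


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtracting the max keeps exp() from overflowing
    exps = {word: math.exp(score - peak) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def next_word(logits: Dict[str, float], greedy: bool = True,
              rng: Optional[random.Random] = None) -> str:
    """Pick the next word: the most probable one, or a probability-weighted draw."""
    probs = softmax(logits)
    if greedy:
        return max(probs, key=probs.get)
    rng = rng or random.Random()
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


if __name__ == "__main__":
    for word, p in sorted(softmax(LOGITS).items(), key=lambda kv: kv[1], reverse=True):
        print(f"{word:>5}: {p:.2f}")
    print("greedy pick:", next_word(LOGITS))
```

Nothing in this process checks whether “mat” is true; it’s simply the most probable continuation, which is exactly the limitation described above.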

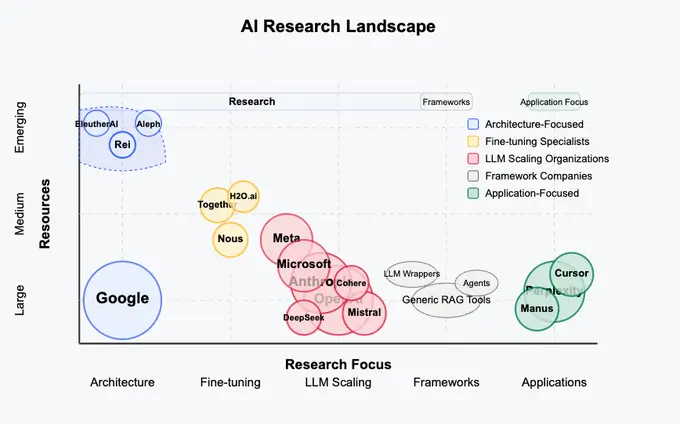

REI, a crypto-native AI research company, believes the answer lies in how models operate, not how big they are.

The DeepSeek wake-up call

We’ve already seen glimmers of this shift. DeepSeek’s Mixture-of-Experts (MoE) approach showed that with smart architecture, you can match or outperform mega-models without mega-costs.
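For the curious, here’s a minimal sketch of the general top-k routing idea behind Mixture-of-Experts layers. It is not DeepSeek’s actual implementation: the four “experts” are toy functions and the router scores are typed in by hand, whereas a real model learns both.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]

# Hypothetical experts. In a real MoE layer each expert is a small neural
# network; plain functions keep the routing logic easy to follow.
EXPERTS: Dict[str, Callable[[Vector], Vector]] = {
    "math": lambda x: [v * 2 for v in x],
    "code": lambda x: [v + 1 for v in x],
    "prose": lambda x: [v - 1 for v in x],
    "misc": lambda x: list(x),
}


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_scores: List[float], k: int = 2) -> Tuple[Vector, List[str]]:
    """Send x to the k highest-scoring experts and blend their outputs.

    gate_scores holds one score per expert; a trained router network would
    produce these, here they are passed in by hand.
    """
    names = list(EXPERTS)
    top = sorted(range(len(names)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])  # renormalise over the chosen experts only
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        expert_out = EXPERTS[names[i]](x)  # only the selected experts do any work
        out = [o + w * e for o, e in zip(out, expert_out)]
    return out, [names[i] for i in top]


if __name__ == "__main__":
    output, used = moe_forward([1.0, 2.0], gate_scores=[3.0, 1.0, 0.5, -2.0])
    print("experts used:", used)
    print("output:", output)
```

Only two of the four experts run for this input. That’s where the savings come from: an MoE model can have a huge total parameter count while each token only pays for the few experts it’s routed to.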

DeepSeek’s R1 model delivered top-tier results, and the final training run of the V3 base model it was built on reportedly cost a measly $5.58 million. That’s over 89 times cheaper than OpenAI’s rumored $500 million budget for its o1 model.
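The “over 89 times” figure is simply the ratio of the two reported costs, in millions of US dollars (keep in mind the $500 million is a rumor, not a disclosed number):

```latex
\frac{500}{5.58} \approx 89.6
```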

When DeepSeek demonstrated this, it challenged the existing AI landscape. Silicon Valley and Wall Street took notice, and AI stocks, Nvidia’s among them, sold off sharply. New questions arose: Are the American giants truly so advanced? Is size their only advantage?

The takeaway? Compute efficiency and task specialization matter more than raw size.

REI takes this a step further and believes that LLMs should only be used where they excel, i.e., handling text queries.

After all, at their core, LLMs are just word-predicting machines.

What is REI?

REI is a crypto-native research organization focused on reimagining artificial intelligence from first principles. Rather than competing in the scale race by throwing more parameters and GPUs at increasingly opaque models, REI is interested in understanding intelligence itself.

Their approach blends insights from neuroscience, cognitive science, distributed systems, and cryptographic infrastructure to develop AI systems that are not only more efficient but also more aligned with human cognition.

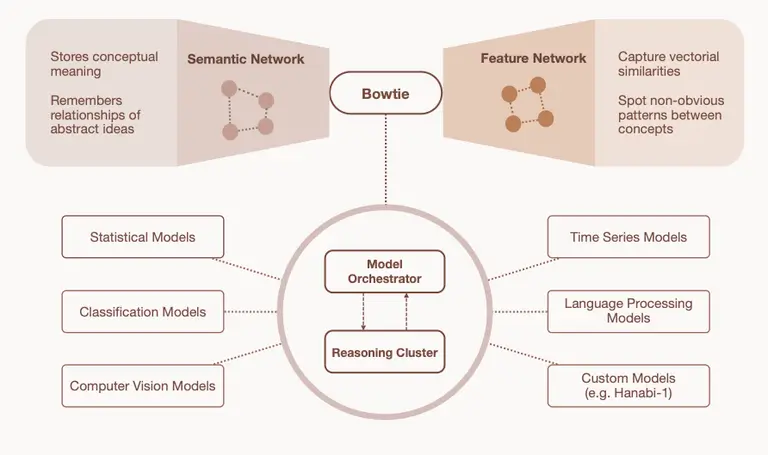

At the heart of REI’s work is the Core framework: a modular architecture that breaks down complex queries into manageable, interrelated parts. Core separates language processing, reasoning, and memory into dedicated subsystems that work in harmony.

This design mirrors the way the human brain compartmentalizes different types of thinking: short-term reasoning, long-term memory, and language processing, into distinct but interconnected functions.
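To picture what “breaking a query into interrelated parts” might look like, here’s a hypothetical sketch: a question is split into sub-tasks, each tagged with the subsystem that would handle it, and a dependency graph decides the order. The sub-task names and the owner mapping are my own illustration, not REI’s actual Core internals; it uses Python’s standard `graphlib` module (3.9+).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical breakdown of "Should I refinance my mortgage?" into sub-tasks.
# Each entry maps a sub-task to the set of sub-tasks it depends on.
SUBTASKS = {
    "parse_question": set(),
    "recall_user_finances": {"parse_question"},
    "fetch_current_rates": {"parse_question"},
    "compare_costs": {"recall_user_finances", "fetch_current_rates"},
    "write_answer": {"compare_costs"},
}

# Which (hypothetical) subsystem would own each sub-task.
OWNER = {
    "parse_question": "language",
    "recall_user_finances": "memory",
    "fetch_current_rates": "external tool",
    "compare_costs": "reasoning",
    "write_answer": "language",
}


def execution_plan(subtasks):
    """Order sub-tasks so each one runs only after everything it depends on."""
    order = list(TopologicalSorter(subtasks).static_order())
    return [(task, OWNER[task]) for task in order]


if __name__ == "__main__":
    for step, (task, owner) in enumerate(execution_plan(SUBTASKS), 1):
        print(f"{step}. {task:<22} -> {owner}")
```

Because fetching rates and recalling your finances don’t depend on each other, a system built this way could run them in parallel.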

Core: A framework for real AGI

Today’s LLMs are great at narrow tasks but struggle with broad, layered problems that require planning, memory, or adaptive learning.

REI’s Core framework radically redefines the role of LLMs. Instead of making them responsible for everything, REI restricts LLMs to being text-processing engines, nothing more.

From there, inputs are handed off to specialized language models that interface with a “Reasoning Cluster” and the Bowtie system, both of which I’ll attempt to explain in a second.
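Here’s a rough sketch of that hand-off, under heavy assumptions: the “LLM” steps only convert text to structure and back (regexes stand in for real language models), while the arithmetic happens in a separate, non-LLM step that reads from a simple memory store. None of these function names come from REI.

```python
import re
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Memory:
    """Stand-in for a memory system such as Bowtie: a simple key-value store."""
    facts: Dict[str, float] = field(default_factory=dict)

    def recall(self, key: str) -> float:
        return self.facts[key]


def llm_parse(text: str) -> dict:
    """LLM role #1 (text in): turn a question into a structured request.
    A regex stands in for the language model here."""
    match = re.search(r"(\d+)% of my (\w+)", text)
    if not match:
        raise ValueError("unsupported request")
    return {"op": "percent_of", "pct": int(match.group(1)), "key": match.group(2)}


def reasoning_step(request: dict, memory: Memory) -> dict:
    """Non-LLM step: works on structured data and memory, never on raw text."""
    base = memory.recall(request["key"])
    return {"key": request["key"], "pct": request["pct"], "value": base * request["pct"] / 100}


def llm_render(result: dict) -> str:
    """LLM role #2 (text out): phrase the structured result for the user."""
    return f"{result['pct']}% of your {result['key']} is {result['value']:g}."


def answer(text: str, memory: Memory) -> str:
    return llm_render(reasoning_step(llm_parse(text), memory))


if __name__ == "__main__":
    mem = Memory({"budget": 2000})
    print(answer("What is 15% of my budget?", mem))
```

The point of the split is that the language layer never has to “do the math”; it only translates in and out.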

Although there is more nuance, the key innovations that set REI apart from the incumbents are as follows.

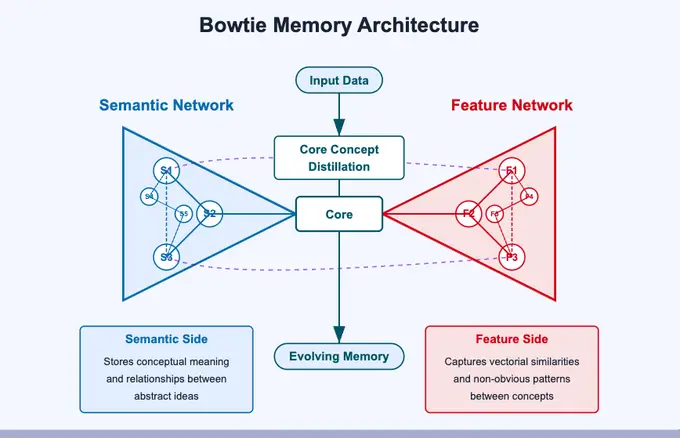

Bowtie architecture: Memory management and concept formation

If you’ve been even remotely paying attention to the AI landscape, you might’ve noticed that ChatGPT now seemingly remembers your past conversations, and you may even think it ‘learns’ from them.

In reality, the ‘knowledge’ from previous interactions remains separate from the model's actual understanding: saved details are simply fed back into the prompt, so any perceived learning or adaptation is an illusion created by prompt engineering. The model itself doesn’t change; nothing persists or evolves inside it.

That is because these models are not learning in real-time. They are simply operating on the knowledge they accumulated during their training run.

REI’s Training at Inference Time changes that. With every prompt and every piece of feedback, the Core system learns, permanently. Memory isn’t just stored. It’s actively integrated and structured.

Together with the Bowtie system, Core captures both explicit knowledge and latent patterns across interactions using dual encoding: One channel stores symbolic representations (semantic relationships), while the other captures abstract, high-dimensional vectors for deeper context awareness.

While I don’t expect you or me to fully understand what that means, all you need to know is that this allows the model to improve its understanding of specific contexts, like your particular job workflow or the concepts involved.
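If you do want a feel for it, here’s a toy version of dual encoding. Each fact is written into a symbolic graph (exact relationships) and also into a vector (here just word counts standing in for a learned high-dimensional embedding), so it can be recalled either exactly or by similarity. This is an illustrative sketch of the general idea, not how Bowtie is actually built.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple


class DualStore:
    """Toy dual encoding: every fact is stored twice.

    - symbolic channel: an explicit subject -> (relation, object) graph
    - vector channel: a numeric vector per fact for fuzzy, similarity-based recall
    """

    def __init__(self) -> None:
        self.graph: Dict[str, Set[Tuple[str, str]]] = defaultdict(set)
        self.vectors: Dict[str, Counter] = {}

    @staticmethod
    def embed(text: str) -> Counter:
        # Stand-in for a learned high-dimensional embedding: bag-of-words counts.
        return Counter(re.findall(r"[a-z0-9]+", text.lower()))

    @staticmethod
    def cosine(u: Counter, v: Counter) -> float:
        dot = sum(count * v[word] for word, count in u.items())
        norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
        return dot / norm if norm else 0.0

    def add(self, subject: str, relation: str, obj: str) -> None:
        self.graph[subject].add((relation, obj))
        text = f"{subject} {relation} {obj}"
        self.vectors[text] = self.embed(text)

    def lookup(self, subject: str) -> List[Tuple[str, str]]:
        """Exact, symbolic recall."""
        return sorted(self.graph.get(subject, set()))

    def similar(self, query: str, top: int = 1) -> List[str]:
        """Approximate recall by vector similarity."""
        q = self.embed(query)
        return sorted(self.vectors, key=lambda t: self.cosine(q, self.vectors[t]), reverse=True)[:top]


if __name__ == "__main__":
    store = DualStore()
    store.add("invoice", "due_in", "30_days")
    store.add("refund", "requires", "receipt")
    print(store.lookup("invoice"))                      # exact, symbolic
    print(store.similar("Do refunds need a receipt?"))  # fuzzy, vector-based
```

The fuzzy query uses “refunds” and “need” rather than the stored wording, yet the vector channel still surfaces the right fact, while the graph channel answers precisely when you know exactly what to ask for.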

With each new input, the system refines concepts, connects ideas, and builds a richer internal model of the world. This enables true learning, where the model improves not just its outputs, but its understanding.
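Here’s a hypothetical sketch of “learning at inference time” in its simplest possible form: every piece of feedback nudges a stored association up or down, and that state can be saved and reloaded across sessions instead of being re-pasted into a prompt. The update rule (a moving average with a made-up learning rate) is my assumption for illustration; nothing here reflects REI’s actual training mechanism.

```python
import json
from typing import Dict, Tuple


class ConceptStore:
    """Toy store whose concept associations are updated by every interaction.

    Hypothetical illustration: feedback changes stored state that outlives
    the session, rather than being pasted back into a prompt.
    """

    def __init__(self, learning_rate: float = 0.5):
        self.lr = learning_rate
        self.strength: Dict[Tuple[str, str], float] = {}  # pair -> strength in [0, 1]

    @staticmethod
    def _key(a: str, b: str) -> Tuple[str, str]:
        return tuple(sorted((a, b)))  # order-independent pair

    def association(self, a: str, b: str) -> float:
        return self.strength.get(self._key(a, b), 0.5)  # 0.5 = no evidence yet

    def observe(self, a: str, b: str, feedback: float) -> None:
        """Move the a<->b association toward feedback (1.0 = confirmed, 0.0 = rejected)."""
        old = self.association(a, b)
        self.strength[self._key(a, b)] = old + self.lr * (feedback - old)

    def dumps(self) -> str:
        """Serialise the learned state so it can persist across sessions."""
        return json.dumps([[a, b, s] for (a, b), s in self.strength.items()])

    @classmethod
    def loads(cls, data: str, learning_rate: float = 0.5) -> "ConceptStore":
        store = cls(learning_rate)
        for a, b, s in json.loads(data):
            store.strength[(a, b)] = s
        return store


if __name__ == "__main__":
    store = ConceptStore()
    for _ in range(3):
        store.observe("invoice", "net-30", 1.0)  # user confirms the link three times
    saved = store.dumps()                        # e.g. written to disk at session end
    print(ConceptStore.loads(saved).association("invoice", "net-30"))
```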

The Reasoning Cluster: A synthetic brain for cognitive processes

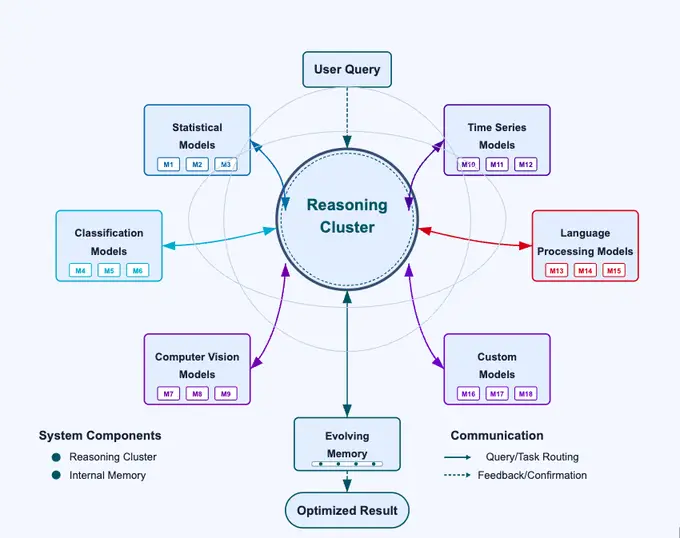

While most LLMs attempt to handle everything in one giant model, REI distributes cognitive tasks across a dedicated Reasoning Cluster. This subsystem acts as the brain of the architecture, handling logical deduction, causal inference, planning, and multi-step reasoning.

The Reasoning Cluster consists of interconnected models, each specialized in a different aspect of cognition. They collaborate dynamically, draw on input from memory and external tools, and then synthesize structured outputs.

The result is a system capable of introspection, feedback loops, and high-order thinking that static LLMs simply can’t replicate.
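As a sketch of specialists collaborating, here’s the classic “blackboard” pattern: several hypothetical expert functions (deduction, causal inference, planning) read a shared workspace, add what they can, and build on each other’s conclusions over several rounds until nothing new appears. Whether REI’s Reasoning Cluster coordinates this way isn’t stated; treat it as an illustration of the concept.

```python
from typing import Callable, Dict, List

Board = Dict[str, object]


def deduce(board: Board) -> Board:
    """Logical deduction: if both premises hold, margins went up."""
    if board.get("revenue_up") and board.get("costs_flat"):
        return {"margin_up": True}
    return {}


def infer_cause(board: Board) -> Board:
    """Causal inference that builds on the deduction specialist's output."""
    if board.get("margin_up"):
        return {"likely_cause": "revenue grew faster than costs"}
    return {}


def plan(board: Board) -> Board:
    """Planning: suggest a next action once a cause is known."""
    if "likely_cause" in board:
        return {"next_step": "check whether the revenue growth is recurring"}
    return {}


# Listed out of order on purpose; repeated rounds let later steps catch up.
SPECIALISTS: List[Callable[[Board], Board]] = [plan, infer_cause, deduce]


def run_cluster(facts: Board, specialists=SPECIALISTS, max_rounds: int = 5) -> Board:
    """Let specialists build on each other's conclusions until nothing new appears."""
    board = dict(facts)
    for _ in range(max_rounds):
        new = {}
        for specialist in specialists:
            for key, value in specialist(board).items():
                if key not in board:
                    new[key] = value
        if not new:
            break  # converged: no specialist can add anything
        board.update(new)
    return board


if __name__ == "__main__":
    for key, value in run_cluster({"revenue_up": True, "costs_flat": True}).items():
        print(f"{key}: {value}")
```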

A notable secondary benefit of this approach is that, unlike black-box LLMs, it can outline reasoning paths for its decisions. It reveals which models influenced an outcome and explains how conclusions were reached, offering clearer insights into how the system arrived at a specific answer.
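A reasoning path like that can be as simple as recording which model produced each intermediate result. In this hypothetical sketch, a decorator logs every step along with the (made-up) name of the model that ran it, and `explain()` replays the log as a readable trail.

```python
from functools import wraps
from typing import Any, Callable, List, Tuple

TRACE: List[Tuple[str, str, tuple, Any]] = []  # (model, step, inputs, output)


def traced(model_name: str) -> Callable:
    """Decorator that logs which (hypothetical) model produced each intermediate result."""
    def decorator(fn: Callable) -> Callable:
        @wraps(fn)
        def wrapper(*args):
            result = fn(*args)
            TRACE.append((model_name, fn.__name__, args, result))
            return result
        return wrapper
    return decorator


@traced("arithmetic-expert")
def monthly_cost(annual: float) -> float:
    return annual / 12


@traced("comparison-expert")
def cheaper(option_a: float, option_b: float) -> str:
    return "A" if option_a < option_b else "B"


def explain() -> List[str]:
    """Render the recorded steps as a human-readable reasoning path."""
    return [f"{i}. {model} ran {step}{args} -> {out}"
            for i, (model, step, args, out) in enumerate(TRACE, 1)]


if __name__ == "__main__":
    choice = cheaper(monthly_cost(1200), 110)
    print("answer:", choice)
    print("\n".join(explain()))
```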

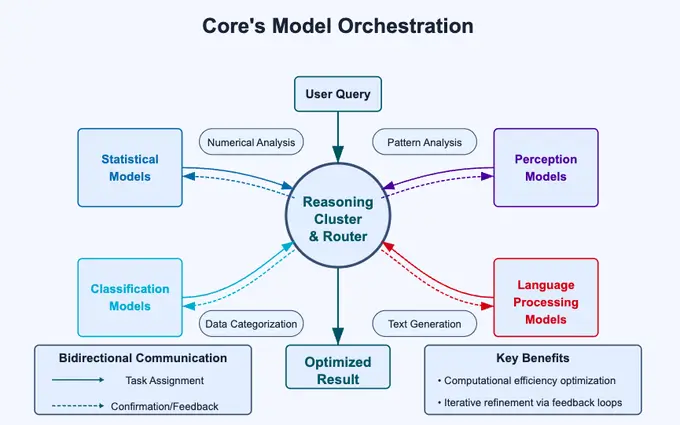

Model Orchestration: Intelligent task distribution

In REI’s framework, no single model has to do it all. Instead, Core functions as a conductor, intelligently assigning tasks to the most relevant expert models in the ecosystem. Whether it's summarizing, coding, logical reasoning, or retrieval, each request is broken down and routed to the optimal subsystem.

This orchestration is designed to improve both speed and quality. By matching each task to the right model, Core reduces redundancy and avoids the inefficiencies of bloated, all-purpose architectures.
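Here’s a bare-bones, hypothetical version of that conductor role: each request is matched to a task type and handed to a stand-in expert, with a generalist fallback. Keyword patterns substitute for what would more likely be a learned classifier, and none of these names come from REI.

```python
import re
from typing import Tuple

KNOWLEDGE = {"total supply": "1 billion tokens"}  # toy retrieval index


def summarize(text: str) -> str:
    """Stand-in summarizer: keep the first sentence after any 'Summarize:' prefix."""
    body = text.split(":", 1)[-1]
    return body.split(".")[0].strip() + "."


def add_numbers(text: str) -> str:
    """Stand-in math expert: add up every integer in the request."""
    return str(sum(int(n) for n in re.findall(r"-?\d+", text)))


def retrieve(text: str) -> str:
    """Stand-in retrieval expert: look up known phrases."""
    for phrase, fact in KNOWLEDGE.items():
        if phrase in text.lower():
            return fact
    return "not found"


# Hypothetical routing table: (pattern, task type, expert), checked in order.
ROUTES = [
    (re.compile(r"\b(add|sum)\b", re.IGNORECASE), "math", add_numbers),
    (re.compile(r"\bsummari[sz]e\b", re.IGNORECASE), "summarize", summarize),
    (re.compile(r"\b(what is|look up)\b", re.IGNORECASE), "retrieval", retrieve),
]


def route(request: str) -> Tuple[str, str]:
    """Send a request to the first matching expert; fall back to a generalist."""
    for pattern, task, expert in ROUTES:
        if pattern.search(request):
            return task, expert(request)
    return "general", "forwarded to a general-purpose model"


if __name__ == "__main__":
    for req in ["Please add 2, 3 and 5", "What is the total supply of REI?", "Write me a poem"]:
        print(req, "->", route(req))
```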

Now that I’ve explained why REI is such a fascinating venture, the inevitable question arises: How can I get involved with the next OpenAI?

Fortunately for us, this is not some private company headquartered in Silicon Valley. It’s an independent research team that understands the power of Internet Capital Markets.

Tokenomics

As the author of a thread on the subject eloquently put it,

“Rei’s token is currently the only liquid public market exposure to an AI lab directly, without the dilution of a broader tech stack, which in turn draws attention and capital.”

He goes further to say,

“Think about it: if you wanted a pure AI bet today, your options are limited. OpenAI, Anthropic, and DeepMind are all locked inside private valuations or diluted inside larger tech conglomerates. REI, on the other hand, is immediately investable, liquid, and accessible.”

And I truly resonate with that. Now, onto the technical bits.

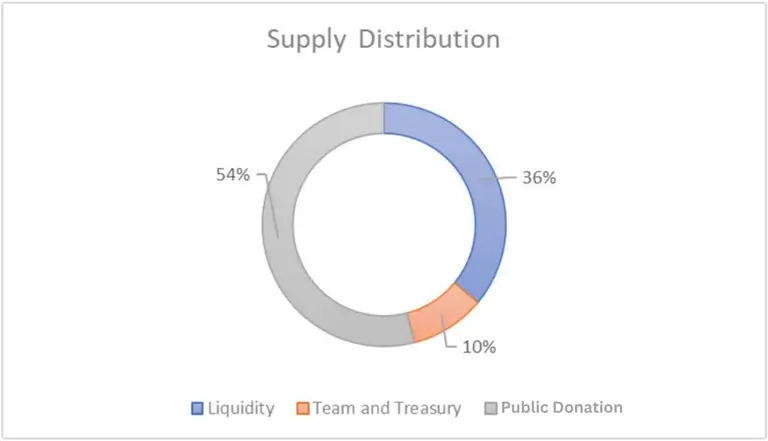

The total supply of REI is 1 billion tokens. The team raised roughly 400,000 USDC through a token sale that offered 54% of the total supply.

30% of the total supply was allocated to a Uniswap liquidity pool on Base, with 6% set aside for other pools (subject to community vote).

Out of the remaining 10%, half of the tokens are allocated to a multi-sig wallet to support research grants and experimental initiatives.

Meanwhile, the other half is held in a Sablier vesting contract with a 6-month cliff, followed by a linear unlock. Additionally, $140,000 is reserved as runway to cover development expenses and team hiring.
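For anyone who wants to sanity-check the numbers, here’s a small script: the buckets add up to 100% of supply, and a raise of roughly 400,000 USDC for 540 million tokens implies a sale price of about $0.00074 per token. The vesting function assumes nothing unlocks before the 6-month cliff and that tokens then unlock linearly over 12 months. That 12-month figure is a placeholder, since the unlock length isn’t given, and some vesting setups instead measure the linear curve from the start date, releasing a lump sum at the cliff.

```python
TOTAL_SUPPLY = 1_000_000_000  # REI tokens

# Allocation percentages as described above.
ALLOCATION_PCT = {
    "token sale": 54,
    "Uniswap LP on Base": 30,
    "other pools (community vote)": 6,
    "research multisig": 5,
    "Sablier vesting": 5,
}
SALE_RAISE_USDC = 400_000  # "roughly", per the article


def tokens(pct: int) -> int:
    return TOTAL_SUPPLY * pct // 100


def vested(months_elapsed: int, cliff_months: int = 6, linear_months: int = 12) -> int:
    """Unlocked tokens in the vesting bucket under an ASSUMED schedule:
    nothing before the cliff, then a straight-line unlock over linear_months.
    linear_months=12 is a placeholder; the actual unlock length isn't stated."""
    total = tokens(ALLOCATION_PCT["Sablier vesting"])
    if months_elapsed < cliff_months:
        return 0
    elapsed = min(months_elapsed - cliff_months, linear_months)
    return total * elapsed // linear_months


if __name__ == "__main__":
    assert sum(ALLOCATION_PCT.values()) == 100  # the buckets cover the full supply
    for name, pct in ALLOCATION_PCT.items():
        print(f"{name:<30} {pct:>3}%  {tokens(pct):>13,}")
    print(f"implied sale price: ~${SALE_RAISE_USDC / tokens(54):.5f} per token")
    for month in (3, 6, 12, 18):
        print(f"month {month:>2}: {vested(month):,} unlocked")
```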

Final thoughts

As it’s laid out, REI’s framework sounds to me like a step closer to AGI: a system that has memory, learns with each new interaction, and divides tasks intelligently, rather than simply predicting words based on what it was trained on.

By splitting language, memory, and reasoning into distinct, specialized systems, REI’s Core architecture learns, evolves, and reasons. With persistent memory, real-time concept formation, and a modular reasoning cluster, it moves past prediction into true cognition.

If AGI is about architecture, not scale, then REI may already be the blueprint. And the best part? It’s liquid, open, and onchain, giving anyone and everyone a chance to invest early. And boy, we do love being early here at blocmates.
