From its humble beginnings in the 1960s with MIT's Project MAC (an ARPA-funded effort to build computers that many people could access at once) to the revolutionary developments of Amazon Web Services and Microsoft Azure, cloud computing has transformed how we store, access, and process data.

Over the decades, this technology has evolved from a niche concept into a cornerstone of modern business and daily life.

Cloud computing has empowered organizations to scale operations, innovate faster, and reduce costs by enabling on-demand access to vast computing resources.

Yet, as centralized cloud systems dominate the landscape, concerns about security, privacy, and control have grown louder, especially in the age of AI.

With AI becoming more prominent in our daily lives and decision-making processes, it's essential to create a transparent and fair operational framework for its use.

Advances in artificial intelligence continue to be driven by ever-greater computational power, yet most large language models (LLMs) developed by corporations remain closed-source "black boxes."

These proprietary systems lack transparency in their internal decision-making processes, raising concerns about accountability and accessibility in AI development.

Fortunately, several open-source LLMs, such as BLOOM, Falcon, LLaMA, and DeepSeek, are now available. These models offer transparency and flexibility, enabling researchers and developers to access, modify, and deploy them for diverse applications.

However, training and deploying these models require substantial computational resources, posing challenges for scalability. Current cloud providers often struggle to meet the growing demand for efficient processing infrastructure.

To address this issue, io.net, a decentralized GPU network built on Solana, aggregates idle GPUs from more than 130 countries.

This innovative solution provides developers with cost-effective and scalable computing power while reducing reliance on centralized providers.

Problem statement

In our last article, which we encourage you to read to better understand how the product works, we explored how the largest decentralized computing network, IO Cloud, has performed so far and explained the engineering that makes it function.

Essentially, io.net tackles the biggest problem AI systems face today: the lack of accessible and cheap computing power.

But why is computing power so difficult to access?

The rapid growth in demand for computing resources has left large data centers and cloud providers struggling to keep pace despite substantial venture capital investments.

While big corporations with favorable contracts often receive priority access, independent research teams, SMEs, and vibe coders face significant challenges.

These smaller entities frequently encounter barriers such as prolonged wait times, high costs, and limited resource access, hindering their ability to innovate and compete. This disparity highlights the need for innovative solutions that provide equitable access to computing power.

Herein lies the advantage of Decentralized Physical Infrastructure Networks (DePIN). At first glance, the problem might look like a shortage of hardware, but that's not entirely true: it's fundamentally an issue of logistics.

Upon closer examination, we find a wealth of unused hardware worldwide, including idle mining rigs, high-performance gaming PCs, and dormant equipment sitting in data centers.

What's missing is an orchestration layer that unifies these scattered machines and gives their owners sufficient incentives to keep them on the network.

The inherent advantages of DePIN

To grasp the power of io.net, consider how a conventional data center operates. Even when running at "full capacity," these centers often have a few unused cards scattered about.

Now, imagine a neighboring data center running at a similar capacity. Neither facility's handful of idle cards is enough on its own to form a complete cluster deployment, which AI workloads often require.

Because these enterprise data centers typically don't coordinate to serve a single client, the idle cards go unused, the facilities lose revenue, and the customer is forced to seek other options.

With io.net, both data centers can contribute their idle hardware to the network, improving resource utilization. Because this capacity would otherwise sit unused, it can be offered to the end customer at a lower price.
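The pooling idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not io.net's orchestration code. The facility names, GPU counts, and greedy largest-first strategy are all hypothetical, and every idle card is assumed to be the same GPU model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Facility:
    name: str
    idle_gpus: int  # idle cards of one GPU model (assumption: all identical)


def form_cluster(facilities: list[Facility], gpus_needed: int) -> dict[str, int] | None:
    """Pool idle GPUs across facilities until the requested cluster size is met.

    Returns a {facility name: GPUs taken} mapping, or None if the combined
    idle capacity is still too small.
    """
    allocation: dict[str, int] = {}
    remaining = gpus_needed
    # Draw from the facilities with the most idle cards first so the
    # cluster spans as few sites as possible.
    for facility in sorted(facilities, key=lambda f: f.idle_gpus, reverse=True):
        if remaining == 0:
            break
        take = min(facility.idle_gpus, remaining)
        if take > 0:
            allocation[facility.name] = take
            remaining -= take
    return allocation if remaining == 0 else None


dc_a = Facility("dc-a", idle_gpus=3)
dc_b = Facility("dc-b", idle_gpus=5)
print(form_cluster([dc_a], 8))        # None: one site alone cannot fill the cluster
print(form_cluster([dc_a, dc_b], 8))  # {'dc-b': 5, 'dc-a': 3}
```

A real scheduler would also need to match GPU models, check bandwidth and latency between sites, and handle nodes dropping out. The sketch ignores all of that.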

Naturally, one might wonder whether the decentralized nature of DePINs could result in reduced reliability and uptime. This is a valid concern, but with thoughtful engineering, this issue can be minimized.

io.net tackles reliability and uptime for its clusters through several strategies:

- Reputation score: Each node has a reputation score, which reflects its availability, uptime, and other performance metrics. This score helps buyers make informed decisions when selecting computing clusters and ensures they choose reliable nodes.

- Tiering system: Verified suppliers are tiered by compliance, hardware quality, and staked IO tokens to maintain high-quality clusters.

- Staking program: Staking and slashing incentivize reliability and penalize bad actors.

- Distributed architecture: By aggregating compute capacity from diverse sources, io.net provides a decentralized platform that can handle demand spikes more flexibly than traditional centralized cloud providers.

- Support & SLAs*: io.net offers robust customer support and Service Level Agreements (SLAs) for reliability at lower costs than traditional providers.

*An SLA is simply a formal term for an agreement that outlines the level of service expected from a vendor and lays out metrics for measuring service.
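The following minimal sketch shows how a reputation score and tiering could feed into supplier selection. The article does not publish io.net's formula, so the metric names, weights, and tier cutoffs below are hypothetical. Only the 200 IO per GPU staking threshold comes from this article's co-staking section. Hardware quality, the third tiering input, is left out for brevity.

```python
from dataclasses import dataclass

# Hypothetical weights; io.net's actual scoring formula is not given in the article.
WEIGHTS = {"availability": 0.4, "uptime": 0.4, "job_success": 0.2}
STAKE_PER_GPU = 200  # IO per GPU required for verified status


@dataclass
class NodeMetrics:
    availability: float  # share of time the node was listed and reachable (0-1)
    uptime: float        # share of rented time without interruption (0-1)
    job_success: float   # share of jobs completed without error (0-1)


def reputation_score(m: NodeMetrics) -> float:
    """Weighted average of the metrics, scaled to 0-100."""
    raw = sum(weight * getattr(m, name) for name, weight in WEIGHTS.items())
    return round(100 * raw, 2)


def supplier_tier(score: float, staked_io: float, gpus: int, compliant: bool) -> str:
    """Hypothetical tiering from reputation, stake per GPU, and compliance status."""
    if staked_io / gpus < STAKE_PER_GPU:
        return "unverified"
    if compliant and score >= 95:
        return "tier-1"
    if score >= 80:
        return "tier-2"
    return "tier-3"


node = NodeMetrics(availability=0.99, uptime=0.98, job_success=0.95)
score = reputation_score(node)
print(score)                                 # 97.8
print(supplier_tier(score, 1_600, 8, True))  # tier-1
print(supplier_tier(score, 1_000, 8, True))  # unverified
```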

Thanks to these mechanisms, io.net serves a diverse clientele, ranging from large enterprises to indie hackers, offering lower prices, greater customizability, and reliability comparable to industry leaders.

In contrast to the big players, io.net has to navigate the tricky waters of incentives with finesse. Their secret? The IO token, which they refer to as "digital oil."

IO tokenomics

You can think of io.net as a large computer that knows no borders, has no prejudice, and is accessible to anyone.

But if you want to be a part of the network, whether by providing hardware or contributing through staking, acquiring IO tokens is essential.

IO at a glance:

- Maximum supply: Capped at 800 million IO.

- Circulating supply: 150 million IO.

- Inflation: New tokens are emitted to suppliers and stakers over 20 years at a decreasing rate.

- Burn mechanism: Reduces supply via programmatic burns funded by network revenue.

IO serves as the currency for GPU resources and various services on the platform. To improve the user experience, IO Cloud also lets GPU renters pay with USDC or credit/debit cards, although these options carry an additional 2% fee intended to encourage renters to acquire IO.

Whatever the payment method, the funds are converted into IO tokens, maintaining consistent buy pressure.

Additionally, io.net employs a programmatic burn system, where the network's revenue is used to purchase and burn IO.

The burn amount is adjusted dynamically based on IO's price, reducing supply and creating deflationary pressure.
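The payment flow and burn described above reduce to simple arithmetic, sketched below. The 2% surcharge comes from the article. The token price, the revenue figure, the share of revenue spent on burns, and all function names are hypothetical. The sketch models the price dependence in the simplest possible way: a fixed USD burn budget buys fewer tokens as the price rises. io.net's actual adjustment formula is not described here.

```python
NON_IO_SURCHARGE = 0.02  # extra fee on USDC or card payments (from the article)


def renter_cost_usd(rental_usd: float, pays_in_io: bool) -> float:
    """What the renter pays, in USD terms, for a given GPU rental."""
    return rental_usd if pays_in_io else rental_usd * (1 + NON_IO_SURCHARGE)


def io_bought(payment_usd: float, io_price_usd: float) -> float:
    """IO acquired when a non-IO payment is converted at the market price."""
    return payment_usd / io_price_usd


def io_burned(revenue_usd: float, burn_share: float, io_price_usd: float) -> float:
    """IO bought back and burned from a share of network revenue.

    burn_share is a hypothetical parameter. With a fixed USD budget, the
    number of tokens burned falls as the IO price rises.
    """
    return revenue_usd * burn_share / io_price_usd


paid = renter_cost_usd(1_000, pays_in_io=False)
print(paid)                              # 1020.0
print(io_bought(paid, io_price_usd=2.0)) # 510.0
print(io_burned(1_000, 0.5, 2.0), io_burned(1_000, 0.5, 4.0))  # 250.0 125.0
```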

The genesis supply of IO was set at 500 million, which will gradually increase to a maximum supply of 800 million over 20 years.

Half of the 800 million IO maximum supply is allocated to GPU suppliers and stakers. From this amount, 15 million IO tokens were designated for the initial airdrop, accounting for 1.875% of the total supply.

The other 50% went to early investors, R&D and ecosystem development, and the team.
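The allocation figures can be checked with a few lines of arithmetic. The one assumption is that "total supply" in the airdrop figure means the 800 million maximum supply. Measured against the 500 million genesis supply, 15 million IO would be 3%, not 1.875%.

```python
from __future__ import annotations

GENESIS_SUPPLY = 500_000_000
MAX_SUPPLY = 800_000_000
AIRDROP = 15_000_000


def allocation_summary() -> dict[str, float]:
    """Derive the headline tokenomics numbers quoted in the article."""
    suppliers_and_stakers = MAX_SUPPLY // 2  # 50% to GPU suppliers and stakers
    return {
        "suppliers_and_stakers": suppliers_and_stakers,
        "investors_rnd_team": MAX_SUPPLY - suppliers_and_stakers,
        "emitted_after_genesis": MAX_SUPPLY - GENESIS_SUPPLY,
        "airdrop_pct_of_max": AIRDROP * 100 / MAX_SUPPLY,
        "airdrop_pct_of_genesis": AIRDROP * 100 / GENESIS_SUPPLY,
    }


for key, value in allocation_summary().items():
    print(f"{key:>24}: {value:,}")
```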

The monthly emission schedule follows a disinflationary curve, meaning that the inflation rate decreases over time.

It starts at 8% annually and decreases gradually each month until it approaches zero. At the time of writing, inflation sits at a modest 7% APR or so.
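A disinflationary schedule like this is easy to simulate. In the sketch below, the 8% starting rate, the 500 million genesis supply, the 800 million cap, and the 20-year horizon come from the article. Three further inputs are assumptions:

- The annual rate is applied to the genesis supply.
- Emissions are paid out monthly.
- The rate shrinks by a hypothetical 1.02% each month.

That decay value was chosen because it makes cumulative emissions land just under the 300 million IO gap between genesis and maximum supply. It is an assumption, not a figure taken from io.net's documentation.

```python
from __future__ import annotations

GENESIS_SUPPLY = 500_000_000
MAX_SUPPLY = 800_000_000
START_ANNUAL_RATE = 0.08  # from the article
MONTHLY_DECAY = 0.0102    # hypothetical: the rate shrinks by 1.02% each month
MONTHS = 20 * 12          # 20-year emission window


def emission_schedule(months: int = MONTHS) -> list[tuple[float, float, float]]:
    """Return (annual_rate, minted_this_month, total_supply) for each month.

    Assumptions: the annual rate applies to the genesis supply, one twelfth
    is minted each month, and minting never pushes supply past the cap.
    """
    rate = START_ANNUAL_RATE
    supply = float(GENESIS_SUPPLY)
    schedule = []
    for _ in range(months):
        minted = min(GENESIS_SUPPLY * rate / 12, MAX_SUPPLY - supply)
        supply += minted
        schedule.append((rate, minted, supply))
        rate *= 1 - MONTHLY_DECAY
    return schedule


sched = emission_schedule()
print(f"month 1 rate:  {sched[0][0]:.2%}")   # 8.00%
print(f"month 13 rate: {sched[12][0]:.2%}")  # 7.07%
print(f"emitted over 20 years: {sched[-1][2] - GENESIS_SUPPLY:,.0f} IO")
```

Under these assumptions, the rate falls to roughly 7% after one year, in line with the figure quoted above, and the cap is never quite reached.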

Starting in July 2025, various investor allocation buckets will begin to vest, adding just over 15% of the total supply to circulation over 35 months.
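The article gives only the total and the duration of the investor unlocks, so the sketch below assumes they are released in equal monthly installments. The 15% figure is rounded down from "just over 15%."

```python
MAX_SUPPLY = 800_000_000
VESTING_SHARE = 0.15  # "just over 15%" of total supply, rounded down
VESTING_MONTHS = 35


def monthly_unlock() -> float:
    """IO unlocked per month under an assumed linear vesting schedule."""
    return MAX_SUPPLY * VESTING_SHARE / VESTING_MONTHS


def cumulative_unlocked(months_elapsed: int) -> float:
    """Total investor IO unlocked after a number of months (clamped to 0..35)."""
    months = max(0, min(months_elapsed, VESTING_MONTHS))
    return monthly_unlock() * months


print(f"{monthly_unlock():,.0f} IO per month")  # 3,428,571 IO per month
print(f"{cumulative_unlocked(12):,.0f} IO after one year")
```

For comparison, a 7% annual rate on the 500 million genesis supply would emit roughly 2.9 million IO per month. Under these simple assumptions, the unlocks would therefore more than double the monthly flow of new tokens while they last.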

Co-staking

In light of the ongoing sell pressure from hardware suppliers offloading their earnings to cover expenses or take profits, io.net found a way to kill two birds with one stone.


On one hand, for hardware suppliers to become verified and eligible for block rewards, 200 IO tokens must be staked per GPU. While this may not seem significant for businesses, it ensures providers have skin in the game.

On the other hand, retail investors' only way to bet on the network's growth was to buy IO, and until now, holding it offered no utility, not even governance.

Enter co-staking, a new staking mechanism in which both sides join forces to strengthen the network and broaden access to it.

The process is straightforward: GPU suppliers generate co-staking offers in the marketplace to obtain the required IO tokens to gain verified supplier status.

In exchange, IO holders accept the offer and receive a portion of the block rewards allocated to that specific supplier.
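The matching logic can be sketched as a small data structure. The 200 IO per GPU requirement comes from the article. The `CoStakingOffer` type, the reward-share parameter, and the assumption that a co-staker fills exactly the supplier's shortfall are hypothetical simplifications, since the article does not say how offers are priced.

```python
from __future__ import annotations

from dataclasses import dataclass

STAKE_PER_GPU = 200  # IO per GPU required for verified supplier status


@dataclass
class CoStakingOffer:
    gpus: int
    supplier_stake: float   # IO the supplier has already staked
    co_staker_share: float  # hypothetical fraction of block rewards offered (0-1)

    @property
    def shortfall(self) -> float:
        """IO a co-staker must supply for the devices to become verified."""
        return float(max(0, self.gpus * STAKE_PER_GPU - self.supplier_stake))


def split_rewards(offer: CoStakingOffer, block_rewards: float) -> tuple[float, float]:
    """Split one period's block rewards into (supplier, co-staker) portions."""
    to_co_staker = block_rewards * offer.co_staker_share
    return block_rewards - to_co_staker, to_co_staker


offer = CoStakingOffer(gpus=8, supplier_stake=400, co_staker_share=0.25)
print(offer.shortfall)              # 1200.0
print(split_rewards(offer, 100.0))  # (75.0, 25.0)
```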

Benefits of co-staking:

- For suppliers: It reduces financial risk by lowering staking requirements, enabling easier deployment of high-performance hardware. This allows suppliers to manage their working capital more efficiently and expand their capacity on io.net.

- For token holders: Co-staking offers a simple way to earn block rewards and diversify holdings without needing to own hardware. By participating in co-staking, token holders can support the ecosystem while generating additional income from their IO tokens.

But as we know, there is no free lunch in this world.

Risks of co-staking for token holders:

- Performance penalties: Devices that fail to meet performance standards may face slashing penalties, which could affect staked amounts and rewards for token holders.

- Market volatility: The value of IO tokens and block rewards can fluctuate based on market conditions, impacting the profitability of staking.

- Lock-up periods: Unstaking tokens requires a cooldown period (typically 14–21 days), limiting liquidity during this time.
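These risks compound, and a quick scenario makes that concrete. Every number here is hypothetical: the stake size, the prices, the 10% slash, and the 30% price drop. Proportional slashing is also an assumed model. The 21-day cooldown is the upper end of the range quoted above. Block rewards earned during the period are ignored.

```python
from datetime import date, timedelta


def stake_after_slash(staked_io: float, slash_fraction: float) -> float:
    """Remaining stake after an assumed proportional slashing penalty."""
    if not 0.0 <= slash_fraction <= 1.0:
        raise ValueError("slash_fraction must be between 0 and 1")
    return staked_io * (1.0 - slash_fraction)


def withdrawal_date(requested: date, cooldown_days: int) -> date:
    """First day unstaked tokens are liquid again."""
    return requested + timedelta(days=cooldown_days)


staked_io = 1_200.0
entry_price = 2.00                                 # hypothetical USD price when staking
remaining_io = stake_after_slash(staked_io, 0.10)  # device missed performance targets
exit_price = entry_price * 0.70                    # hypothetical 30% drop during cooldown

print(withdrawal_date(date(2025, 7, 1), 21))       # 2025-07-22
print(f"${staked_io * entry_price:,.2f} -> ${remaining_io * exit_price:,.2f}")
```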

Road ahead

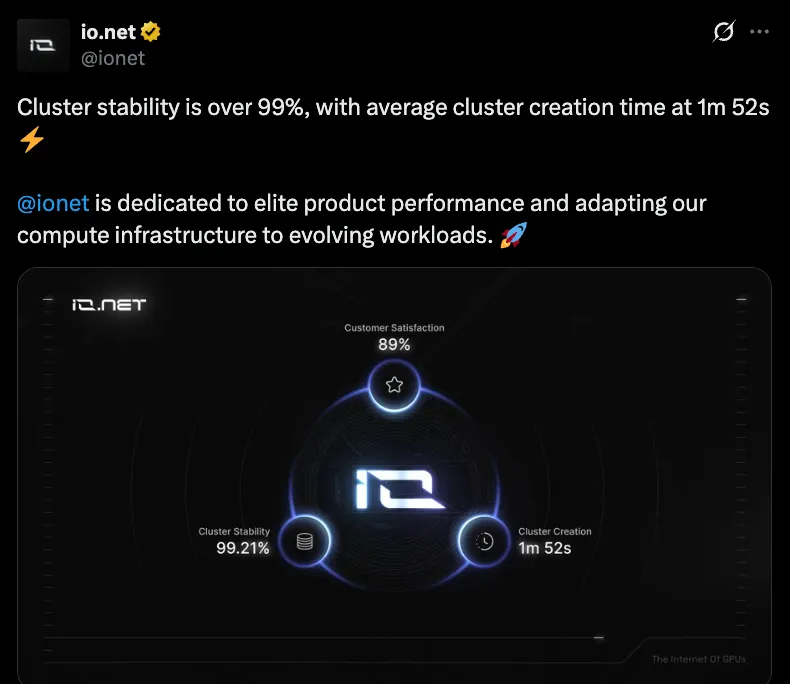

Looking at the near future, io.net aims to keep leading the decentralized compute race by onboarding more suppliers while keeping cluster stability above 99%.

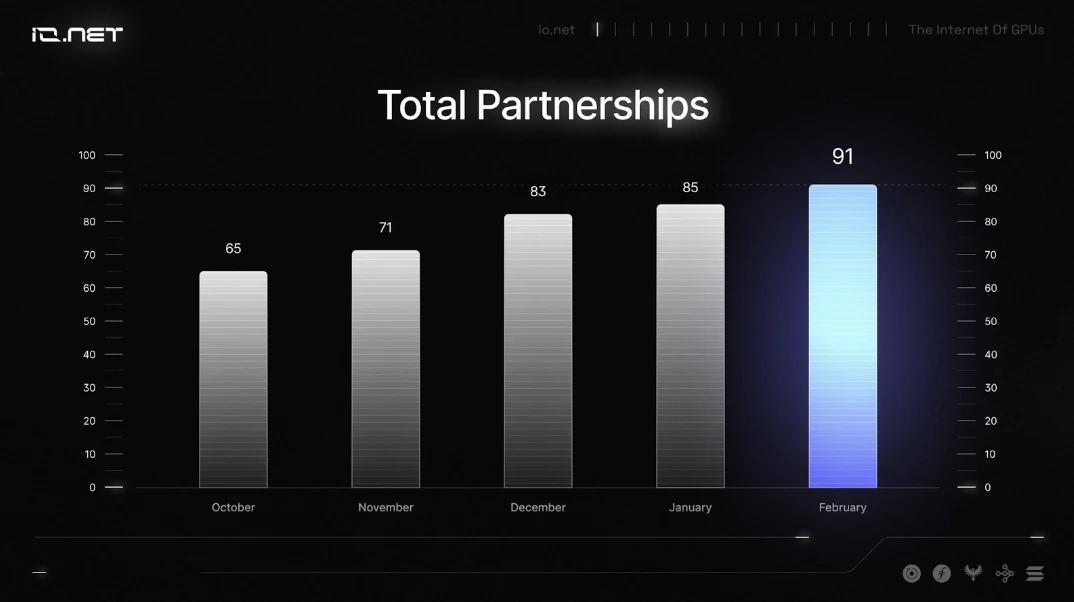

On the demand side, business development initiatives should naturally increase usage of the network.

Recent partnerships are numerous and include projects such as Swarm Network, Maiga.ai, FrodoBots, Injective, Alpha Network, and OpenLedger, all leveraging io.net’s decentralized network to enhance their services.

For instance, Injective utilizes io.net’s GPU platform to connect with its iAgent framework, allowing developers to train and implement AI models for blockchain-based DeFi activities.

Maiga.ai, meanwhile, leverages io.net’s infrastructure to enable real-time data analysis and predictive analytics. This improves AI-driven trading strategies, providing users with smarter insights.

Expanding our view, io.net seeks to challenge the dominance of major players like Amazon’s AWS and Microsoft’s Azure in the cloud infrastructure market.

These companies' strength lies in their wide range of in-house products and services, positioning them as a comprehensive resource for businesses of any scale.

They offer a complete spectrum of solutions, from standard cloud services like database management and storage to advanced offerings in machine learning and robotics.

If io.net aims to increase its market share, simply renting GPUs won't cut it. The network's foundation is solid; now it's time to unleash its full potential by identifying key use cases and undercutting the competition on price.

But the real game-changer? Creating a blue ocean with entirely new products.

Enter IO Intelligence, io.net's AI infrastructure and API platform. Imagine developers and everyday users accessing a vast library of top-tier AI models in one place.

Think beyond just tapping into io.net's compute network—envision a marketplace where creators can list their AI models and directly profit from their ingenuity.

This isn't just about cheaper computing power; it's about unlocking an entirely new AI economy fueled by io.net's decentralized infrastructure.

This is just one example of the possibilities within a decentralized environment, which could foster a lively grassroots ecosystem and ultimately enhance the demand for raw computing power on io.net.

Conclusion

A significant advantage of DePINs over centralized counterparts is their ability to coordinate a number of geographically dispersed machines under one roof, along with built-in token incentive mechanisms.

Instead of needing to raise an excessive amount of money from investors, io.net can bootstrap the supply (GPUs) with token incentives, hoping this will naturally create demand.

But this comes at a price.

In the early stages, DePIN tokens struggle because marginal sellers are plentiful while buyers are scarce. The market understands this dynamic well, which is why such tokens are often regarded as unappealing. However, io.net has time on its side.

As the network continues to mature and penetrate the broader AI and cloud market, io.net could reach a critical juncture where demand for its services not only offsets emissions but exceeds them, naturally driving the price of the IO token up and to the right.
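The tipping point described above can be expressed as a break-even condition: each month, buy-and-burn must remove at least as many tokens as emissions add. A minimal sketch follows. The emission figure roughly matches a 7% annual rate on the 500 million genesis supply. The IO price and the share of revenue spent on burns are hypothetical inputs, not io.net figures.

```python
def net_monthly_supply_change(emission_io: float, revenue_usd: float,
                              burn_share: float, io_price_usd: float) -> float:
    """New IO emitted minus IO bought back and burned in one month.

    A negative result means burns outpace emissions (net deflation).
    """
    burned_io = revenue_usd * burn_share / io_price_usd
    return emission_io - burned_io


def breakeven_revenue_usd(emission_io: float, burn_share: float,
                          io_price_usd: float) -> float:
    """Monthly revenue at which burns exactly offset emissions."""
    if not 0.0 < burn_share <= 1.0:
        raise ValueError("burn_share must be in (0, 1]")
    return emission_io * io_price_usd / burn_share


emission = 2_900_000  # IO per month, roughly 7% a year on 500 million IO
print(f"{breakeven_revenue_usd(emission, burn_share=0.5, io_price_usd=1.0):,.0f}")  # 5,800,000
print(net_monthly_supply_change(emission, 8_000_000, 0.5, 1.0))  # -1100000.0
```

Doubling the token price doubles the revenue needed to break even, because each dollar of revenue then burns half as many tokens.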

Given that cost management poses the greatest challenge for companies utilizing cloud services, io.net’s significantly lower pricing and decentralized nature make it an attractive alternative to the AWSs and Azures of the world.

Thanks to the io.net team for unlocking this article. All of our research and references are based on public information available in documents, etc., and are presented by blocmates for constructive discussion and analysis. To read more about our editorial policy and disclosures at blocmates, head here.
