Whether it’s chatbots or more sophisticated agents, AI is experiencing the adoption that crypto dreams of. We have undoubtedly gone from 0 to 100 with AI in the last four months.

The rapid pace at which AI seems to not just improve but also garner users is something we’ve never really witnessed with any other significant technological milestone.

I mean, a few weeks ago, pretty much everyone was ghiblifying their photos and trying out other themes with OpenAI’s new image generator, and it was fascinating to watch the trend spread like wildfire.

However, the release of OpenAI’s latest GPT-4o model brought renewed attention to one of the most persistent challenges in AI: reliability.

Criticism quickly spread across the timeline on X, with many users calling out the model’s overly polished and, at times, patronizing output.

It served as a reminder that, despite the impressive strides we’ve made, truly error-free synthetic intelligence remains some way off.

Two major ailments continue to plague AI: hallucinations and bias, both of which drive the error rate in AI outputs. In the case of GPT-4o, the latter was the issue, so much so that OpenAI eventually rolled the model back.

If your first thought is, “Why can’t this be solved, given how rapidly AI is advancing?”, you’re asking the right question.

The issue is that, much like the scaling trilemma in crypto networks, AI faces its own trade-off, known as the training dilemma.

In trying to reduce hallucinations and improve consistency, developers often increase a model’s feedback precision. But this comes at a cost: it can introduce bias, the model’s tendency to stray from objective truth in favor of skewed or overly cautious outputs. The reverse also holds, since efforts to reduce bias tend to bring hallucinations back.

The reality is that this dilemma has left a lingering challenge for AI. Even when addressed through fine-tuning models aimed at improving reliability, a new issue emerges. These fine-tuned models struggle to incorporate new knowledge, becoming outdated or rigid over time.

While this dilemma may not have an absolute solution, at least not yet, the most viable path toward improved reliability lies in adopting a multi-model consensus architecture.

In this approach, multiple models collaborate, cross-verify, and converge to produce outputs that are more objective, accurate, and consistent.

If you’re a crypto native, you can almost smell the coffee brewing here. A centralized approach to improving reliability doesn’t necessarily bring us closer to objective truth.

Why?

Because when multiple models operate under centralized control, the output ultimately reflects the biases and choices of the curator, rather than a true consensus.

The bottom line is that for a multi-model architecture to be truly reliable, a decentralized approach to consensus is essential. The path to more trustworthy AI rests on a verification system that doesn’t rely on any single authority or curator, but instead distributes trust across the network.

In light of the foregoing, our spotlight rests on Mira Network. Mira’s approach to AI verification and output reliability is as intriguing as it is timely. And in the next few paragraphs, we’ll explore exactly how it works.

What is Mira Network?

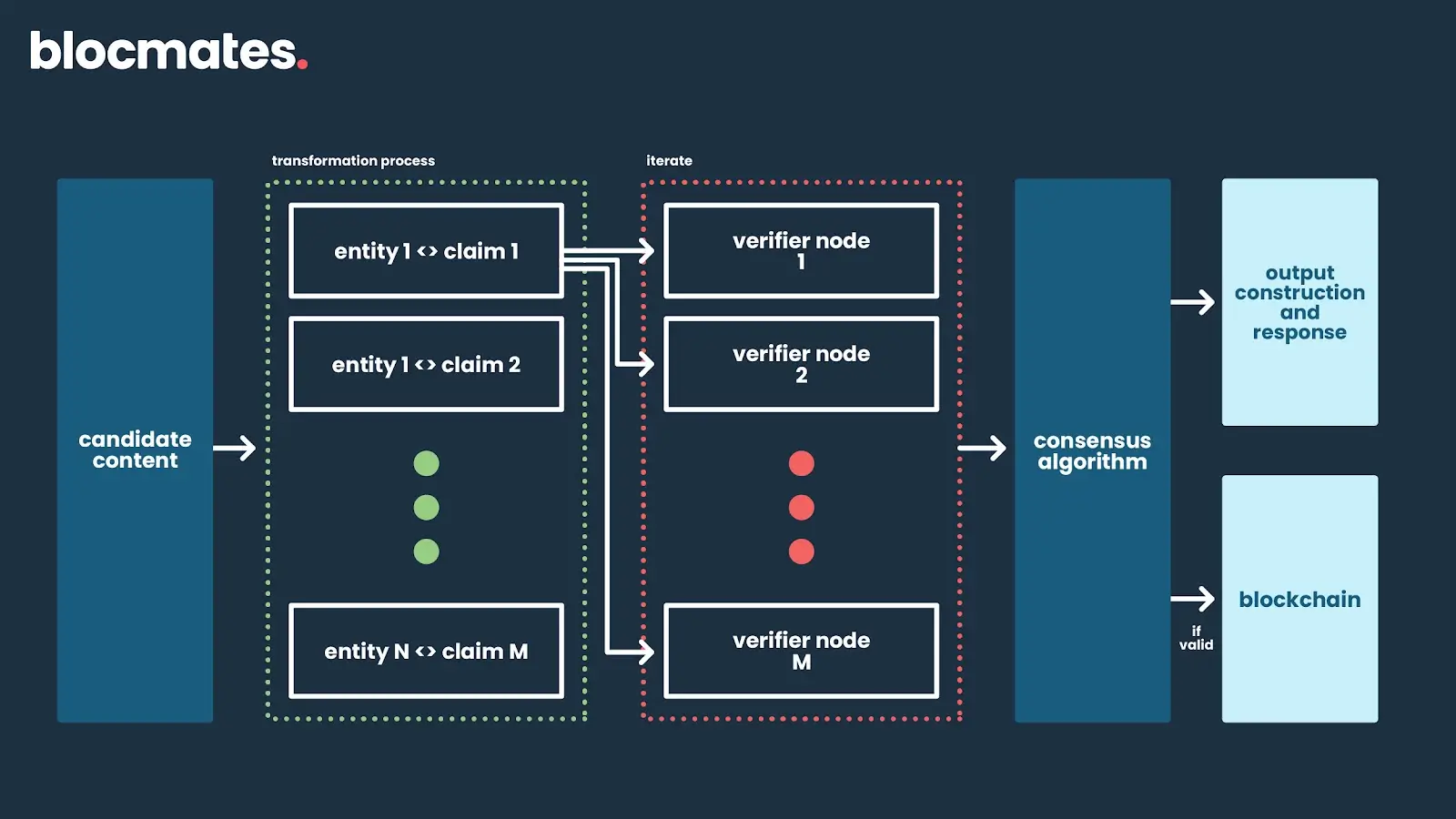

Mira Network is an on-chain, decentralized protocol for trustless verification of AI output. It relies on distributed verifier nodes running diverse AI models, which are economically incentivized to verify honestly, with the goal of objective, consistent, and reliable output.

Through Mira’s architecture, both simple content, such as statements and easy problems, and more complex content, such as code and video, can be verified in a decentralized and reliable manner.

Put simply, Mira takes the multi-model verification path to reduce errors (hallucinations and bias) in AI outputs. It combines three pieces: a distributed network of verifier nodes, a consensus mechanism, and candidate content transformation, which typically breaks the content down into independently verifiable claims.

Working mechanics

To understand how each part of the Mira Network infrastructure works, let’s walk through an example that mirrors the process.

Suppose a user’s candidate content is, “Arsenal is a club in London that has won three (3) UEFA Champions League titles.” The transformation process begins by breaking the content down into claims, a step known as binarization:

- Arsenal is a club in London

- Arsenal has won three (3) UEFA Champions League titles

Next, the network distributes these claims to verifier nodes, whose models check each claim. This step is called distributed verification and, for security and privacy, no single verifier sees the complete content.
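
To make the splitting-and-distribution step concrete, here is a minimal Python sketch. It is not Mira’s actual implementation: the `Claim` class, the `assign_claims` function, the node names, and the rule that each claim goes to `per_claim` randomly chosen nodes are all assumptions made for illustration. The only property it tries to capture is the one described above, namely that no single node ends up holding every claim.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """One independently verifiable statement (hypothetical structure)."""
    claim_id: int
    text: str


def assign_claims(claims, node_ids, per_claim, seed=None):
    """Randomly give each claim to `per_claim` nodes.

    Guarantees that no node receives all claims, so no node can
    reconstruct the full candidate content.
    """
    if len(claims) < 2:
        raise ValueError("need at least two claims to split the content")
    if len(node_ids) < 2 * per_claim:
        raise ValueError("need at least 2 * per_claim nodes")

    rng = random.Random(seed)
    assignment = {node: [] for node in node_ids}
    for index, claim in enumerate(claims):
        eligible = list(node_ids)
        if index == len(claims) - 1:
            # For the last claim, skip nodes that already hold every
            # earlier claim; otherwise they would see the whole content.
            eligible = [n for n in node_ids
                        if len(assignment[n]) < len(claims) - 1]
        for node in rng.sample(eligible, per_claim):
            assignment[node].append(claim)
    return assignment


claims = [
    Claim(0, "Arsenal is a club in London"),
    Claim(1, "Arsenal has won three (3) UEFA Champions League titles"),
]
nodes = ["node-a", "node-b", "node-c", "node-d", "node-e"]
for node, held in assign_claims(claims, nodes, per_claim=2, seed=7).items():
    print(node, [c.claim_id for c in held])
```

Requiring at least twice as many nodes as copies per claim is just the simplest condition under which this sketch can always keep the last claim away from nodes that have seen everything else.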

Then, once the claims have been verified by the specialized models, a hybrid consensus mechanism kicks in. Known as proof-of-verification, it combines proof-of-stake (PoS) and proof-of-work (PoW).

In this phase, a cryptoeconomic mechanism is at play: verifiers are incentivized to actually run inference on the claims rather than merely attest to them.

Lastly, the consensus result is returned to the content owner together with a cryptographic certificate attesting to the outcome.
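
As a rough illustration of this final step, the Python sketch below tallies verifier verdicts for each claim and bundles the result into a certificate-like record. Everything specific here is an assumption: the two-thirds threshold, the function names `reach_consensus` and `build_certificate`, and the use of a plain SHA-256 digest as a stand-in for whatever cryptographic certificate Mira actually issues.

```python
import hashlib
import json
from collections import Counter
from fractions import Fraction


def reach_consensus(votes, threshold=Fraction(2, 3)):
    """Return the verdict backed by at least `threshold` of the votes.

    `votes` maps a node id to its True/False verdict on one claim.
    Returns None when no verdict reaches the threshold.
    """
    if not votes:
        raise ValueError("no votes submitted")
    verdict, count = Counter(votes.values()).most_common(1)[0]
    if Fraction(count, len(votes)) >= threshold:
        return verdict
    return None


def build_certificate(claim_verdicts):
    """Bundle per-claim verdicts with a digest of their canonical JSON form.

    The digest only makes tampering detectable; it is not a signature.
    """
    payload = json.dumps(claim_verdicts, sort_keys=True).encode("utf-8")
    return {
        "verdicts": claim_verdicts,
        "digest": hashlib.sha256(payload).hexdigest(),
    }


votes_by_claim = {
    "Arsenal is a club in London":
        {"node-a": True, "node-b": True, "node-c": True},
    "Arsenal has won three (3) UEFA Champions League titles":
        {"node-c": False, "node-d": False, "node-e": True},
}
verdicts = {claim: reach_consensus(v) for claim, v in votes_by_claim.items()}
print(build_certificate(verdicts))
```

A real certificate would presumably be signed or anchored on-chain; the digest here only shows the idea of tying the outcome to a verifiable fingerprint.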

As mentioned earlier, Mira also takes privacy seriously. It keeps content and inputs private by breaking them into entity-claim pairs and randomly sharding those pairs across network participants (nodes).

This sharding lets candidate content be transformed and verified piece by piece, and verifier responses remain private until consensus is reached.

Mira’s use of secure computation techniques to protect privacy also extends past consensus. The certificate is issued in cryptographic form and carries only the verification details specific to each node’s work.

Mira’s economic incentive model/nodes

Mira employs a hybrid cryptoeconomic model that secures the network while creating an incentive flywheel. This hybrid model combines PoW and PoS mechanisms in a unique way that assures network stability and growth.

So, how does it work? Mira’s approach to PoW diverges from traditional consensus models. Instead of solving complex mathematical puzzles with high-powered machines, Mira standardizes the verification process by transforming it into a system of structured, multiple-choice questions.

This, however, creates a fresh problem: verifiers could simply guess their way to a truckload of rewards, and the fewer options a question has, the likelier a random guess is to be correct.
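
The arithmetic behind that worry is easy to check. The short Python sketch below assumes each question has equally likely options and that guesses are independent from one question to the next; the function name `guess_success_probability` is ours, not Mira’s.

```python
def guess_success_probability(options, rounds=1):
    """Chance that uniform random guessing is right on every one of
    `rounds` independent questions with `options` choices each."""
    if options < 2 or rounds < 1:
        raise ValueError("need at least 2 options and 1 round")
    return (1 / options) ** rounds


print(guess_success_probability(2))             # 0.5
print(guess_success_probability(4))             # 0.25
print(guess_success_probability(2, rounds=10))  # 0.0009765625
```

A single binary question is a coin flip, which is why guessing looks attractive. Guessing correctly over many questions in a row gets unlikely fast, which is what makes sustained random guessing detectable.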

That’s where PoS comes in. To take part in verification, nodes must put up a meaningful stake, which is subject to slashing if they guess randomly instead of running inference.

With penalties in place, node operators are pushed to act in good faith and verify honestly. This further secures the network, as the majority of staked tokens remain in the hands of good actors, acting as a defense against malicious nodes.
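
A toy expected-value calculation shows why staking changes the picture. The Python sketch below is a simplification built on assumptions that are not taken from Mira’s documentation: a guesser earns a fixed `reward` when the guess happens to be right and loses a fixed `slashed_stake` whenever it is wrong, with every wrong answer being caught.

```python
def expected_guess_payoff(options, reward, slashed_stake):
    """Expected payoff of one uniform random guess among `options` choices."""
    p_correct = 1 / options
    return p_correct * reward - (1 - p_correct) * slashed_stake


def break_even_slash(options, reward):
    """Slash amount at which guessing has zero expected payoff.

    Solves p * reward - (1 - p) * s = 0 with p = 1 / options,
    which gives s = reward / (options - 1).
    """
    if options < 2:
        raise ValueError("need at least 2 options")
    return reward / (options - 1)


# With 4 options and a reward of 9, guessing stops paying once the
# slash per wrong answer exceeds 3.
print(break_even_slash(4, 9))            # 3.0
print(expected_guess_payoff(4, 9, 3))    # 0.0
print(expected_guess_payoff(4, 9, 10))   # -5.25
```

In practice, slashing is more likely to be triggered by a pattern of deviations than by a single wrong answer, so treat the numbers as intuition rather than a model of the protocol.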

Mira’s economic model is designed to encourage broad participation, allowing new entrants to join the network and earn incentives.

The result? Greater decentralization, and with it, increased reliability, as a wider range of verifier models contribute to a more diverse and expansive knowledge base.

It’s important to note that Mira Network is taking a strategic approach to distributed verification. The transition will be gradual, starting with carefully selected or whitelisted node operators, then evolving toward a more decentralized model.

In this setup, multiple instances of the same model may process each verification request. This redundancy acts as a built-in audit layer, helping to identify lazy or underperforming operators.
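
One way to picture this audit layer is the Python sketch below. It is an assumption-laden illustration, not Mira’s mechanism: the `(operator, model, answer)` tuple layout, the `flag_outliers` name, and the rule of flagging any operator whose answer differs from the strict majority among replicas of the same model are all hypothetical.

```python
from collections import Counter, defaultdict


def flag_outliers(responses, min_replicas=3):
    """Flag operators whose answer differs from the strict-majority
    answer among operators running the same model.

    `responses` is a list of (operator_id, model_name, answer) tuples
    for a single verification request.
    """
    by_model = defaultdict(list)
    for operator, model, answer in responses:
        by_model[model].append((operator, answer))

    flagged = set()
    for replicas in by_model.values():
        if len(replicas) < min_replicas:
            continue  # too few replicas to compare against
        answer_counts = Counter(answer for _, answer in replicas)
        majority, count = answer_counts.most_common(1)[0]
        if count * 2 <= len(replicas):
            continue  # no strict majority, so nobody is singled out
        flagged.update(op for op, answer in replicas if answer != majority)
    return flagged


responses = [
    ("op-1", "model-x", "true"),
    ("op-2", "model-x", "true"),
    ("op-3", "model-x", "false"),  # disagrees with its own replicas
    ("op-4", "model-y", "false"),  # only replica of model-y, not compared
]
print(flag_outliers(responses))  # {'op-3'}
```

A single disagreement is weak evidence, so an operator would presumably only be penalized after being flagged repeatedly across many requests.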

At the same time, the system is designed so that the potential window for malicious behavior is narrow, while honest participation is rewarded through well-aligned incentives.

Writer’s thoughts

As we enter the golden age of AI, the idea of a sycophantic, error-prone model should be enough to send chills down your spine. No matter how you frame it, unreliable AI introduces serious risks.

Now more than ever, users need to trust the outputs generated from their prompts, whether it’s for decisions, insights, or creative work.

That’s exactly why Mira’s architecture matters. It isn’t just an idea; it’s a proven framework for verifiable, decentralized AI you can actually depend on.

And with the launch of Klok, an AI chat app built on Mira’s decentralized, trustless verification network, Mira has demonstrated that its model is not just visionary, but ready for real-world deployment.

Klok leverages Mira’s multi-model infrastructure, where top-tier LLMs like GPT-4o, DeepSeek, and Llama 3.3 act as trustless verification nodes. These models collaboratively verify prompts, reinforcing the integrity and reliability of each interaction.

At the moment, Mira’s public testnet is live and you can check it out.

Beyond being a decentralized framework for trustworthy AI, Mira carries the spirit of a public good. After all, any serious effort to ensure safe and reliable AI isn’t just a technical milestone; it’s a service to humanity.

Note: A handful of the blocmates team are deep in the Mira trenches, so factor that bias in. All of our research and references are based on public information available in documents, etc., and are presented by blocmates for constructive discussion and analysis. To read more about our editorial policy and disclosures at blocmates, head here.
