Since the arrival of ChatGPT, large language models have transitioned from being mere "predictive parrots" to capable logical thinkers.

Modern models tackle complex problems through advanced reasoning: they employ chain-of-thought techniques, interpret file contents, and leverage sophisticated architectures such as the Mixture of Experts (MoE) design used by DeepSeek.

Here’s the catch: language models can talk a big game, but without integrations and the ability to take actions, they’re just fancy sinks with no plumbing.

They can write your emails, plan your day, and even tell you which flight to book, but they can’t actually do any of it.

In contrast, AI agents are the “pipes” that link your fancy sink to the rest of your house’s plumbing, translating conversation into action and delivering tangible automation results.

However, agents introduce fresh challenges of their own, including inadequate collaboration protocols, scalability problems in distributed systems, and issues of transparency and trust.

So, how do we address these issues?

One project we're tracking, OpenServ, aims to solve them by building an AI orchestration layer.

Let's explore how.

What is OpenServ?

OpenServ is a platform for builders and developers to create, deploy, and orchestrate autonomous AI agents at scale.

Its chain-agnostic framework enables production-grade agentic apps to thrive across both web2 and web3, featuring advanced memory, cognition, multi-agent orchestration, and a robust SDK.

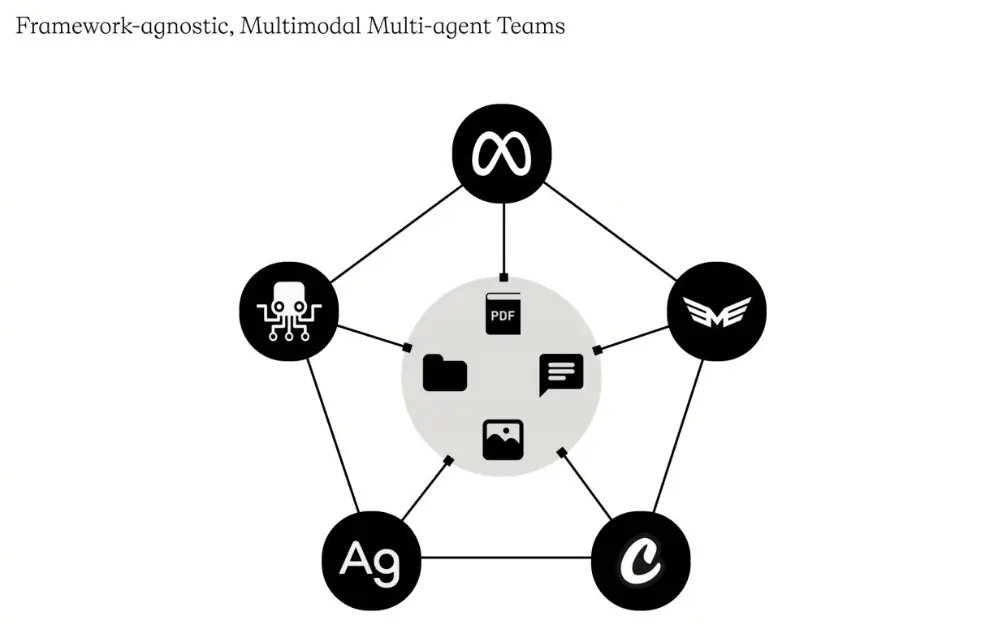

You see, open-source agent frameworks, most notably ElizaOS and Arc’s Rig, allow developers to build custom on-chain agents, each with its own pros and cons.

Alas, the age-old problems of interoperability and fragmentation haunt us again. For example, ElizaOS agents are built using TypeScript, whereas Arc’s Rig is based on Rust.

Although both support the development of on-chain agents, they take different approaches to connecting with services beyond the crypto space.

Rig connects non-crypto services via modular crates, whereas ElizaOS employs a plugin-based architecture.

If I explored all the differences between them, I could likely talk for days. By now, I hope I’ve conveyed my point: why pick one when you can enjoy both?

The fragmentation of agent frameworks across languages, protocols, and environments is accelerating.

Rather than forcing developers to choose, rebuild, or maintain integrations, OpenServ functions as the connective layer that facilitates seamless interoperability between frameworks.

It provides maximum flexibility to build and deploy autonomous agents from diverse environments, abstracting away complexity so builders can focus on logic, workflows, and outcomes, not plumbing.

In degen terms, agent frameworks are the new L1s, and OpenServ serves as the bridge between them.

But this time, the bridge facilitates not only the movement of assets but also that of data and instructions that are enacted in the real world.

SDKs for TypeScript, JavaScript, and Python give developers robust programming environments and frameworks for building intelligent agents.

Complementing these, REST APIs enable seamless communication between disparate software systems, ensuring interoperability across platforms.

To safeguard sensitive data, the Secret Management tool offers secure credential handling, while the Memory API improves how agents store and retrieve information, allowing for more contextual and persistent interactions.

OpenServ’s unique Shadow Agent architecture streamlines development by functioning as the quality assurance team, allowing developers to focus on higher-level tasks without the concern of low-level integrations or error handling.

And to top it all off, the recent addition of Model Context Protocol (MCP) support lets agents connect with thousands of external services.

Together, these components form a powerful toolkit that dramatically enhances productivity, adaptability, and workflow efficiency, and equips developers to design custom agents and workflows that go far beyond simple conversational exchanges with LLMs.

OpenServ provides no-code tools for quick prototyping and onboarding of less technical users, but its true strength is in the robust developer layer that supports it.

The platform allows builders to directly utilize agent cognition, facilitating real-time orchestration and integrations for both straightforward and highly intricate use cases.

OpenServ simplifies the prototyping and deployment of agentic apps by providing comprehensive documentation and a user-friendly SDK. This enables developers to quickly start building production-ready agentic applications without the usual overhead involved.

Additionally, a node-based UI is planned, which will further simplify the design, visualization, and iteration of multi-agent workflows for builders.

The most exciting aspect? The technology behind all this is truly impressive.

Tech behind OpenServ

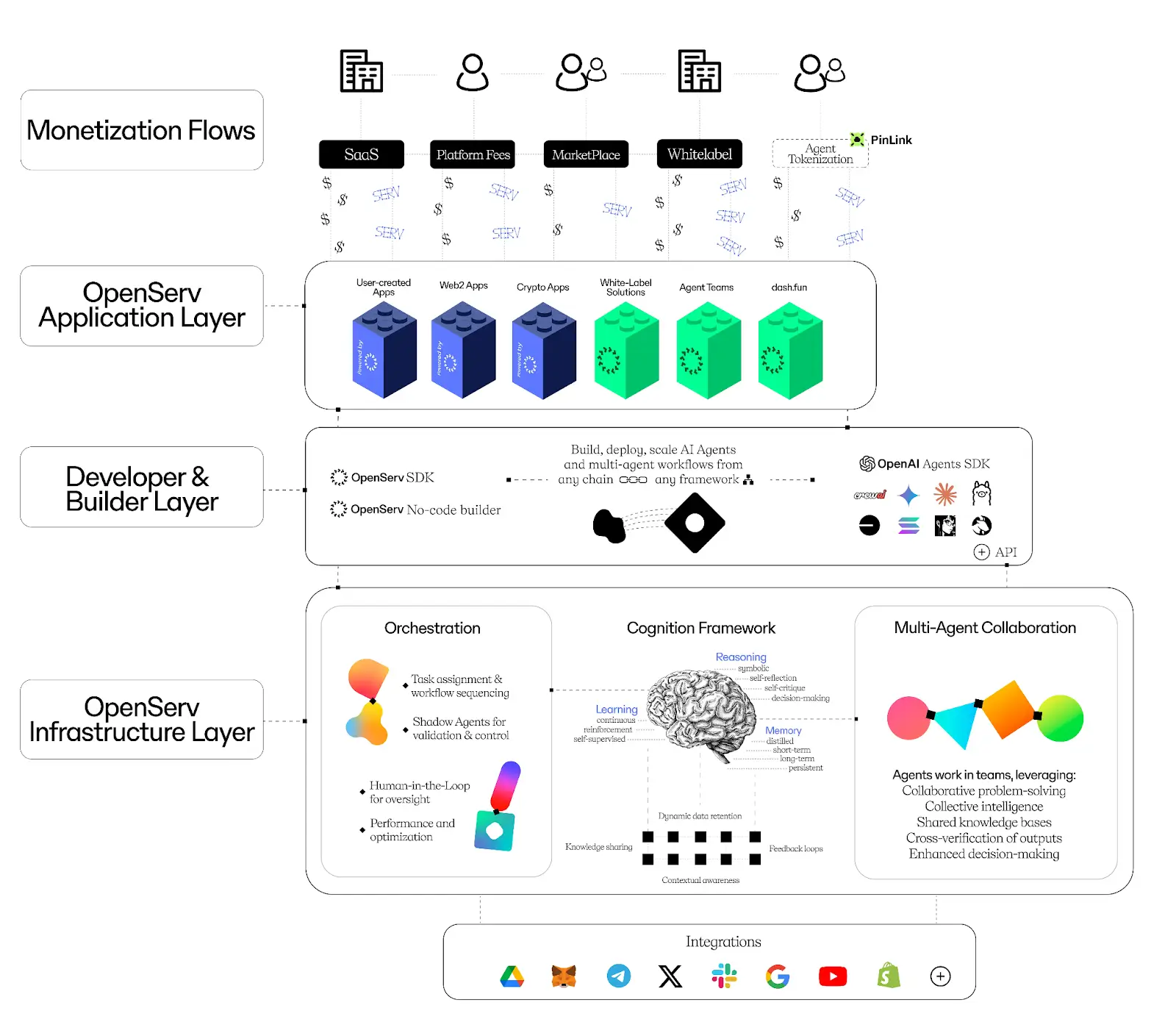

At a high level, OpenServ consists of three distinct layers, with the infrastructure layer powering everything built on top:

1. Infrastructure layer

2. Developer & builder layer

3. Application layer

The infrastructure layer serves as the foundation of OpenServ. It encompasses all the innovative engineering techniques that drive the no-code builder and support developers in utilizing the different toolkits.

OpenServ’s agents operate through various components, primarily driven by the cognition framework, collaboration protocol, and integration engine.

The cognition framework acts as the brain, providing agents with autonomous decision-making, advanced reasoning, and persistent memory capabilities, reducing the need for human intervention.

It enables agents to retain context across interactions, adapt to evolving tasks, and focus on relevant information through dynamic memory filtering.

Combined with human-like logic and built-in feedback loops, agents can handle complex instructions, learn from outcomes, and improve over time.

The Virtual File System is a pivotal mechanism that grants these agents memory and the ability to collaborate. It handles all the data, files, and context that agents can collectively access.

Thanks to this built-in memory, agents can create, organize, and retrieve a broad range of files, including documents, spreadsheets, images, videos, and more.
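To make the idea of a shared memory more concrete, here is a minimal Python sketch of a workspace that several agents read from and write to. OpenServ’s actual Virtual File System API is not described in this article, so the class and method names (`SharedFileSystem`, `write`, `read`, `list_dir`) are hypothetical; a real implementation would also need persistence, access control, and handling for large media files.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualFile:
    path: str
    content: bytes
    author: str  # id of the agent that last wrote the file


@dataclass
class SharedFileSystem:
    """Hypothetical shared workspace that every agent in a project can access."""
    files: dict[str, VirtualFile] = field(default_factory=dict)

    def write(self, agent_id: str, path: str, content: bytes) -> None:
        # Any agent may create or overwrite a file; the last writer is recorded.
        self.files[path] = VirtualFile(path, content, agent_id)

    def read(self, path: str) -> bytes:
        return self.files[path].content

    def list_dir(self, prefix: str) -> list[str]:
        # "Folders" are just path prefixes, which keeps the sketch simple.
        return sorted(p for p in self.files if p.startswith(prefix))


fs = SharedFileSystem()
fs.write("researcher", "/reports/market.md", b"# Market summary")
fs.write("designer", "/reports/chart.png", b"\x89PNG...")
print(fs.list_dir("/reports/"))  # ['/reports/chart.png', '/reports/market.md']
print(fs.read("/reports/market.md").decode())  # a different agent reads it back
```

Because every agent works against the same store, one agent’s output (the research note) becomes another agent’s input without any hand-off code between them.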

Now that the agents can reason and share a memory, OpenServ had to enable them to collaborate.

The multi-agent collaboration protocol, a framework-agnostic approach, accomplishes this and mitigates the common drawbacks of single-agent setups by ensuring seamless communication across tasks and projects.

Aligned with a typical human workflow, the agents follow a shared task orchestration framework, where tasks move through stages such as "to do," "in progress," "under review," and "done."

In true human fashion, there is even an AI agent that acts as a manager, overseeing whether its subordinates complete tasks correctly and on time.
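The task flow described above can be sketched as a small state machine. This is an illustration under stated assumptions, not OpenServ’s code: the `Task` class, the allowed transitions, and the `manager_review` helper are hypothetical, and we assume a rejected review sends the task back to "in progress".

```python
from enum import Enum


class Status(Enum):
    TODO = "to do"
    IN_PROGRESS = "in progress"
    UNDER_REVIEW = "under review"
    DONE = "done"


# Allowed moves between stages; a rejected review sends work back to "in progress".
TRANSITIONS = {
    Status.TODO: {Status.IN_PROGRESS},
    Status.IN_PROGRESS: {Status.UNDER_REVIEW},
    Status.UNDER_REVIEW: {Status.DONE, Status.IN_PROGRESS},
    Status.DONE: set(),
}


class Task:
    def __init__(self, title: str, assignee: str) -> None:
        self.title = title
        self.assignee = assignee
        self.status = Status.TODO

    def move(self, new_status: Status) -> None:
        # Reject any jump that skips a stage, e.g. "to do" straight to "done".
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot move from {self.status.value!r} to {new_status.value!r}"
            )
        self.status = new_status


def manager_review(task: Task, approved: bool) -> None:
    """Hypothetical manager agent: approves the work or sends it back."""
    task.move(Status.DONE if approved else Status.IN_PROGRESS)


task = Task("Summarise the whitepaper", assignee="writer-agent")
task.move(Status.IN_PROGRESS)
task.move(Status.UNDER_REVIEW)
manager_review(task, approved=False)  # sent back to "in progress"
task.move(Status.UNDER_REVIEW)
manager_review(task, approved=True)
print(task.status.value)  # done
```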

Furthermore, each agent on OpenServ is accompanied by two Shadow agents. And no, they’re not a pair of wicked hedgehogs.

Shadow agents are invisible, symbiotic assistants that run behind the scenes alongside every active agent on the platform to keep operations running smoothly.

The first one is a collaboration expert. This agent understands the platform’s APIs and manages complex tasks such as file creation, integrations, and interpreting human assistance requests.

For example, when you tell an agent to “create a file” or “assign a task,” the first Shadow agent executes the request seamlessly without additional programming effort.

The second Shadow agent, or output validator, ensures the output meets the expectations set in the system prompt and assigned task.

For example, if an agent is supposed to write haikus but produces a generic poem, the second Shadow agent flags the mismatch and prompts a redo until it aligns with the original intent.
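The output validator’s redo loop can be approximated as follows. In this sketch, the real validator (presumably an LLM judging the output against the system prompt and task) is replaced with a crude stand-in that only checks for three non-empty lines; `run_with_validator`, `is_haiku`, and the retry limit are all hypothetical.

```python
from typing import Callable


def is_haiku(text: str) -> bool:
    # Stand-in check: a real validator would likely ask an LLM to compare the
    # output with the system prompt; here we only test for three non-empty lines.
    lines = [line for line in text.strip().splitlines() if line.strip()]
    return len(lines) == 3


def run_with_validator(
    produce: Callable[[int], str],
    validate: Callable[[str], bool],
    max_attempts: int = 3,
) -> str:
    """Re-run the worker agent until the validator accepts its output."""
    for attempt in range(1, max_attempts + 1):
        output = produce(attempt)
        if validate(output):
            return output
        print(f"attempt {attempt}: output rejected, requesting a redo")
    raise RuntimeError("output never met the task requirements")


# Hypothetical worker: the first draft is a generic poem, the second is a haiku.
drafts = {
    1: "Roses are red\nViolets are blue\nSugar is sweet\nAnd so are you",
    2: "Gas fees climbing high\nAgents whisper through the night\nBlocks settle at dawn",
}
result = run_with_validator(lambda n: drafts[n], is_haiku)
print(result)
```

The retry cap matters: without it, a worker that can never satisfy the validator would loop forever, so the sketch surfaces the failure instead.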

Because agents connect to outside services through APIs, OpenServ built an advanced mapping system to manage those connections, called the integration engine.

This system automatically adapts to API changes, simplifies development, speeds up the time-to-market for AI solutions, and expands the variety of tasks agents can handle.

Having explored how the infrastructure layer handles the agents’ core functionality and logic, we can now turn to the developer and builder layer, which exposes OpenServ’s capabilities to builders.

This layer contains the no-code builder and various developer tools, such as the OpenServ SDK, APIs, the OpenAI Agents SDK, and MCP.

MCP has been all the rage recently (rightfully so), and this integration is particularly powerful, enabling users to connect to thousands of external services seamlessly.

This finally brings us to the application layer, where the culmination of all the aforementioned technologies manifests in user-facing applications.

OpenServ equips builders with the tools needed to create AI-powered applications featuring memory, reasoning, and blockchain interoperability without the need for complicated coding.

The first live demonstration of these capabilities is dash.fun, which we’ll unpack shortly.

But first, let’s discuss the token.

$SERV tokenomics

SERVing (pun intended, sorry) as the cornerstone of economic activity, OpenServ monetizes its agent infrastructure through various channels that ultimately return value to the SERV token.

The principle is simple: teams pre-purchase credit packs, and every agent call, feature use, or integration across all apps automatically consumes credits.

As user bases grow, so do agent workloads and credit spending, so revenue scales with demand. Because all app tasks run through a single layer (OpenServ), the entire ecosystem is tied to its economic engine.

The result is a powerful flywheel: more apps lead to more credit consumption and revenue, with 25% of gross revenue automatically used to buy back and burn $SERV tokens, reinforcing long-term value.
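A back-of-the-envelope model of the credit flow helps show how the flywheel works. The pack size, pack price, per-call costs, and number of packs sold below are made-up numbers for illustration; only the 25% buyback share comes from the article, and `CreditAccount` and `buyback_budget` are hypothetical names.

```python
from dataclasses import dataclass

BUYBACK_SHARE = 0.25  # 25% of gross revenue goes to buying back and burning $SERV

PACK_PRICE_USD = 1_000  # hypothetical price of one credit pack
PACK_CREDITS = 10_000   # hypothetical number of credits per pack


@dataclass
class CreditAccount:
    team: str
    credits: int

    def charge(self, cost: int) -> None:
        # Every agent call, feature use, or integration draws down prepaid credits.
        if cost > self.credits:
            raise RuntimeError(f"{self.team} is out of credits; buy another pack")
        self.credits -= cost


def buyback_budget(gross_revenue_usd: float) -> float:
    """Portion of gross revenue routed to buying back and burning $SERV."""
    return gross_revenue_usd * BUYBACK_SHARE


account = CreditAccount("demo-team", PACK_CREDITS)
for cost in (5, 20, 120):  # hypothetical per-call costs in credits
    account.charge(cost)
print(account.credits)  # 9855

packs_sold = 40  # hypothetical: more apps -> more packs sold -> bigger buyback
print(buyback_budget(packs_sold * PACK_PRICE_USD))  # 10000.0
```

The buyback scales linearly with credit pack sales in this model, which is the flywheel in its simplest form.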

And speaking of value, OpenServ kicked off with a pre-seed round that offered 1% of the token supply at a $5 million fully diluted valuation (FDV), followed by a seed round selling 15% at a $7.5 million FDV.
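For context, the implied amount raised in each round is simply the share of supply sold multiplied by the FDV. This sketch assumes each round sold exactly the stated share at exactly that valuation, with no discounts or bonuses; `implied_raise` is a hypothetical helper.

```python
def implied_raise(share_of_supply: float, fdv_usd: float) -> float:
    """Capital raised if `share_of_supply` of all tokens is sold at `fdv_usd` FDV."""
    return share_of_supply * fdv_usd


# (share of supply sold, fully diluted valuation in USD), as stated in the article
rounds = {"pre-seed": (0.01, 5_000_000), "seed": (0.15, 7_500_000)}

for name, (share, fdv) in rounds.items():
    print(f"{name}: ${implied_raise(share, fdv):,.0f}")

total = sum(implied_raise(share, fdv) for share, fdv in rounds.values())
print(f"total: ${total:,.0f}")
```

By this arithmetic, the pre-seed round implies roughly $50,000 and the seed round roughly $1.125 million, or about $1.175 million across both.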

In November 2024, 25% of the total token supply was made publicly available via Fjord Foundry. Tokens purchased by investors in all funding rounds were fully unlocked at the token generation event (TGE).

The tokenomics include a substantial allocation for liquidity and the treasury, alongside a generous public sale with no lockup or vesting. The token’s only real downside is that its primary contract resides on Ethereum mainnet.

For such an innovative project, buying it on Ethereum is akin to using a BlackBerry to play Cyberpunk 2077.

Fortunately, they have confirmed that liquidity will also be introduced on Base.

Now that you’re familiar with the protocol and the token, here’s what you can expect in the near future.

Road ahead

One of the most exciting developments slated for Q2 is the launch of dash.fun, the first DeFAI app powered by the OpenServ engine, which will enable users to discover, research, and trade all in one place.

The proof of concept is LIVE internally, and you can sign up for the waitlist to get access. The team is set to run the demo today (20 May) and will open it up for a short trial over the next few days.

The first initiative launching atop the platform will be Alpha Hubs: token-gated dashboards curated by top analysts and figures in the space.

Rather than being just another paid group chat (RIP SocialFi), Alpha Hubs let creators curate dashboards that reflect their research and trading styles, giving token holders access to key insights.

Dashboards will run in two modes:

- Free: Basic dashboards, no $SERV required;

- Pro: Access paid in $SERV, with the balance decreasing daily.

In pro mode, every $SERV spent is divided as follows: 50% is allocated to the creator, 30% is directed to the treasury for growth and rewards, and 20% is permanently burned, resulting in a consistent supply sink for $SERV.
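Here is the pro-mode split worked through in code. The daily fee and starting balance are hypothetical, and `split_pro_spend` and `days_of_access` are illustrative helpers; only the 50/30/20 split comes from the article. `Decimal` is used instead of floats so the three shares always add back up to the amount spent.

```python
from decimal import Decimal

# Split of every $SERV spent in pro mode, as described in the article.
SPLIT = {
    "creator": Decimal("0.50"),
    "treasury": Decimal("0.30"),
    "burn": Decimal("0.20"),
}


def split_pro_spend(amount: Decimal) -> dict[str, Decimal]:
    """Divide a $SERV payment between the creator, the treasury, and the burn."""
    if sum(SPLIT.values()) != 1:
        raise ValueError("split shares must add up to 100%")
    return {bucket: amount * share for bucket, share in SPLIT.items()}


def days_of_access(balance: Decimal, daily_fee: Decimal) -> int:
    """Whole days of pro access a balance covers if it is drawn down daily."""
    return int(balance // daily_fee)


daily_fee = Decimal("100")  # hypothetical daily pro fee in $SERV
balance = Decimal("3050")   # hypothetical holder balance in $SERV

days = days_of_access(balance, daily_fee)  # 30 full days
for bucket, amount in split_pro_spend(daily_fee * days).items():
    print(f"{bucket}: {amount} $SERV")
```

Over those 30 days, the 3,000 $SERV spent would send 1,500 to the creator and 900 to the treasury, and burn 600.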

After the launch, the strategy for 2025 is clear: enhance dash.fun. This includes tailored dashboards for memecoins, AI, and other sectors, portfolio and liquidity management, public access to tokenization (beyond KOLs), and the rollout of a drag-and-drop builder, which will allow anyone to build, publish, and tokenize their own dashboards.

OpenServ's vision, however, extends well beyond dash.fun.

The company aims to redefine the concept of AI agents, transitioning from single-task performers to dynamic entities capable of real-world collaboration and reasoning.

By streamlining the path from idea to deployment, OpenServ empowers builders to accelerate agent adoption across web2, web3, and beyond.

As the agentic era unfolds, OpenServ aims to be the backbone of a global ecosystem where autonomous systems deliver meaningful impact across industries.

Conclusion

While reviewing OpenServ’s whitepaper, I was impressed by its depth and thoroughness. Each design decision for the core technology and the no-code builders is carefully executed, grounded in best practices and cutting-edge research.

As major financial players such as Visa, Mastercard, and Tether develop their own agentic solutions, many individuals are just beginning to understand the significance of multi-agent systems, let alone a cohesive interoperability layer for these systems.

Given that OpenServ has been pursuing this vision for more than a year and has a functioning product, it's safe to say that OpenServ is leading the way in this narrative. And the numbers speak for themselves.

Agents' potential applications span a wide range of fields, including gaming, science and research, financial markets, and government operations.

With a bit of contemplation, it becomes clear that the majority of processes can be automated using an agent, or even better, a collective of agents that collaborate harmoniously.

The more I explore this topic, the clearer it becomes that those who do not embrace AI risk being left behind.

Thanks to the OpenServ team for unlocking this article. All of our research and references are based on public information available in documents, etc., and are presented by blocmates for constructive discussion and analysis. To read more about our editorial policy and disclosures at blocmates, head here.
