(In Lady Whistledown’s voice) Dearest Gentle Reader,

Right now, in H2 2025, it is hard to deny that about 90% of daily internet users have encountered AI, used some form of it, or come to depend on it every single day.

The growth has been phenomenal, and adoption is accelerating faster than it has for any technology before it.

However, not everyone who uses AI is familiar with how it is categorized. When it comes to artificial intelligence, the most popular model is not necessarily the best one.

AI can be categorized in many ways. By capability, AI can be narrow, general, or superintelligent; going a level deeper, it can be characterized as reactive machines, limited memory, theory of mind, or, the scary part… self-aware.

AI can also be categorized into learning paradigms, differentiated as supervised, unsupervised, reinforcement, and other variant learning paradigms.

Finally, we can also categorize AI by architecture, focusing on algorithmic structures such as traditional machine learning models, neural-network-based models, generative models, large language models, and multimodal models.

This sets the tone for how we can describe the model on our plate today, Kimi.ai by Moonshot.

What is Kimi.ai?

Kimi.ai is the brainchild of the Chinese AI company, Moonshot.

Kimi is a multimodal mixture-of-experts (MoE) language model with 32 billion activated parameters (1 trillion parameters in total), capable of handling complex tasks in seconds.

By multimodal, we mean that Kimi AI can understand more than text, allowing users to prompt it with images, files, code, and other types of input, pretty much like ChatGPT and Grok.

By mixture of experts (MoE), we mean that Kimi does not use all of its parameters for every prompt. Instead, a router sends each token to a small subset of specialised sub-networks (the experts), so only about 32 billion of the 1 trillion parameters are active at any one time.
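To make the routing idea concrete, here is a toy sketch of top-k expert selection. It is not Moonshot's implementation: the eight scalar "experts", the distance-based router, and `k=2` are all made-up assumptions chosen to keep the example tiny.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and normalise their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_layer(token, experts, router, k=2):
    """Run only the selected experts and blend their outputs."""
    selection = route_top_k(router(token), k)
    output = sum(weight * experts[i](token) for i, weight in selection)
    return output, [i for i, _ in selection]

# Hypothetical toy setup: expert i multiplies its input by (i + 1),
# and the router prefers experts whose index is close to the token value.
NUM_EXPERTS = 8
experts = [lambda x, scale=i + 1: x * scale for i in range(NUM_EXPERTS)]
router = lambda token: [-abs(token - i) for i in range(NUM_EXPERTS)]

output, used = moe_layer(3.0, experts, router, k=2)
print(f"experts used: {used} of {NUM_EXPERTS}, output: {output:.3f}")
```

Only two of the eight experts do any work for this token, which is the same trick, at a vastly smaller scale, that lets a 1-trillion-parameter model run with about 32 billion parameters active.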

There are a few features that set Kimi apart.

For one, most AI chatbots lose the plot if you give them too much info at once. Kimi doesn’t. Where ChatGPT can handle, say, a handful of pages, Kimi can chew through massive documents like entire books, reports, or even a gigantic codebase.

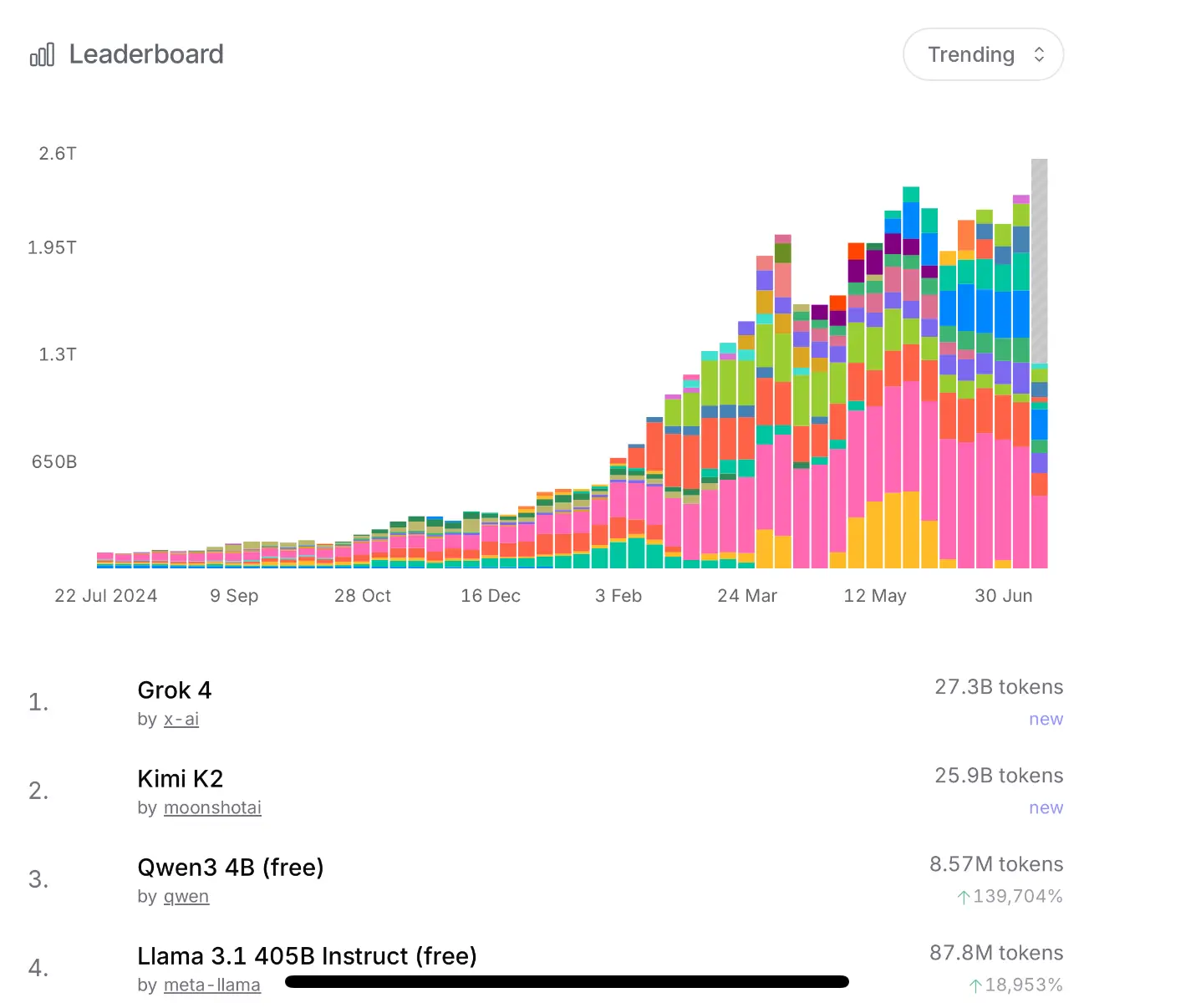

Where other multimodal models are typically fed light inputs, Kimi can handle pretty large files, making it one of the most efficient models out there. At the moment, Kimi’s K2 model sits at number 2 on the leaderboard rankings for trending LLMs.

This makes Kimi suitable for more arduous work when compared to other models out there.

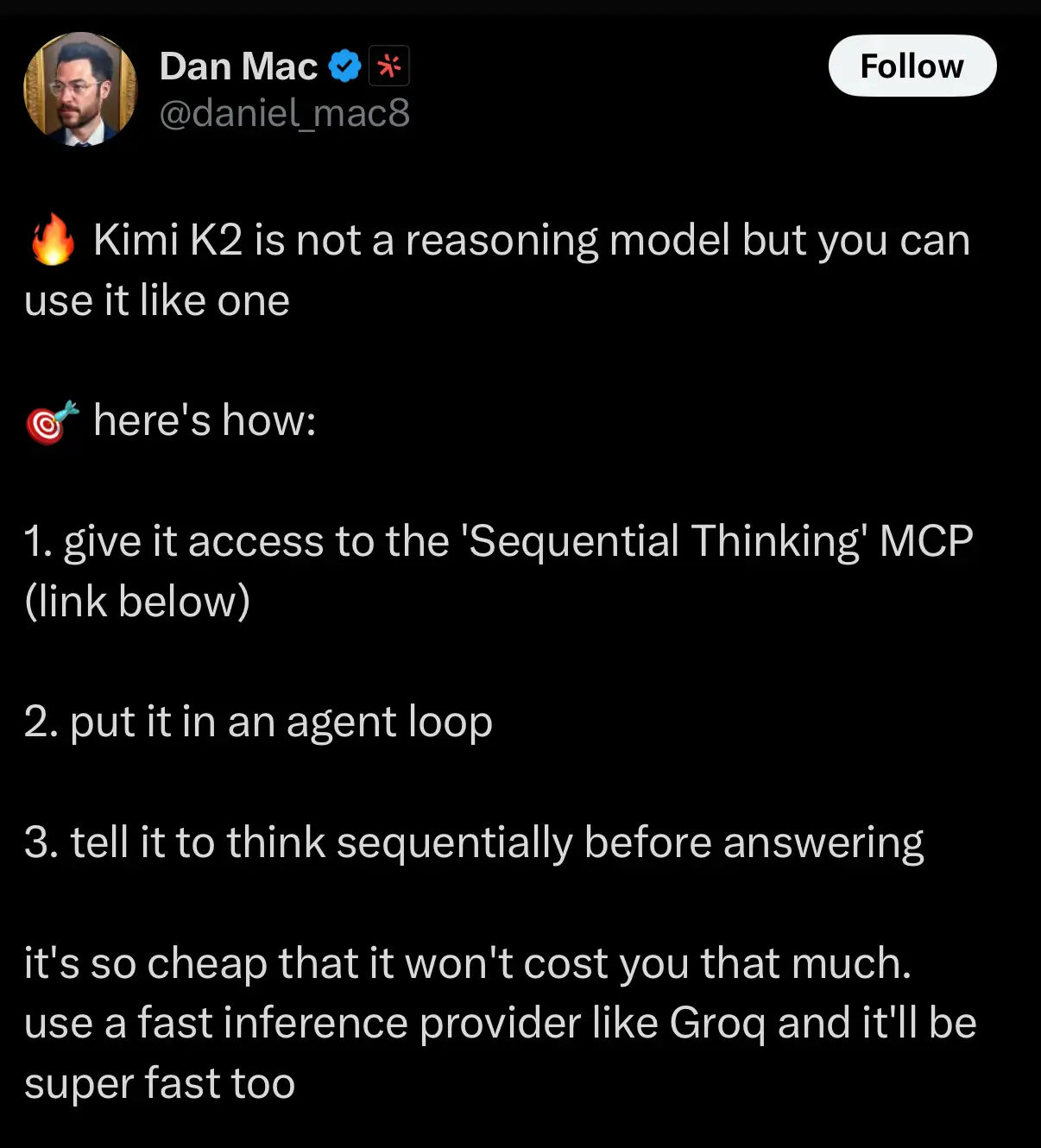

Where it also gets interesting is that unlike the GPT or Grok series, Kimi isn’t a reasoning model. Reasoning models are usually trained to follow multi-step logic, solve maths or coding problems in stages, handle abstract thinking and planning, and break down tasks into subtasks pretty much like agents.

However, when it comes to Kimi, it’s not trained or designed to function as a “reasoning-first” model.

Rather, Kimi’s design leans more toward information retrieval (finding stuff, summarizing, searching), document analysis (handling long files, summarizing content), and multimodal inputs (images, PDFs, code, etc).

It can handle logical tasks (math, coding, step-by-step thinking), but complex reasoning chains, problem-solving, and advanced logic puzzles are not its main strength, unlike models built with a “reasoning-first” approach such as OpenAI’s GPT-4.1/o1, Anthropic’s Claude 3.5 Sonnet, Gemini 1.5 Pro, and even its homeland competition, DeepSeek-V2.

In its newest version (Kimi K2), users are already finding ways to make the model behave like a reasoning model too, using the Sequential Thinking Model Context Protocol (MCP) server.

Another area where Kimi stands out is that it is an open-source model with capabilities that are miles ahead of some of its competitors. Paywalled models such as ChatGPT, Claude, and Gemini all charge subscription fees for full access, while open-source models like Meta’s LLaMA 3 or Mistral demand self-hosting or third-party platforms, making them impractical for most non-technical users.

This makes it available for commercial and research use without restrictive terms, giving developers, companies, and independent users access to a high-performance language model with no strings attached. Open releases of this scale are rare, especially from companies capable of building competitive models at this level.

By combining extreme long-context capability with open access, Kimi K2 shifts the dynamic in a market where most high-performing models remain closed or tightly controlled. It offers a practical tool for those needing to process large volumes of information or integrate advanced language models into products, without the typical barriers of cost, licensing, or platform dependence.

Kimi AI takes a different approach. It offers key features like long-document reading, real-time web search, and multimodal input without charging users or forcing them into a technical setup. Kimi K2 is fully open source, meaning anyone, including businesses, can use or build on it freely.

How Kimi K2 changes the game

Kimi K2 stands out by combining long context with open access. The open-weight release is built with a 128K-token context window (the Kimi chat app has separately advertised support for inputs of up to 2 million characters), meaning it can process and work with a large amount of information at once.

For reference, that’s enough to handle an entire book, a long legal document, a stack of research papers, or a sizeable dataset in a single prompt. Where smaller-context models lose track of long inputs, Kimi K2 is designed to take it all in and deliver meaningful output. Insane, innit?
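If you want a feel for what fits in a context window, a little back-of-the-envelope arithmetic helps. The sketch below assumes roughly 300 words per page and about 1.3 tokens per English word; both are rules of thumb, not measurements from Kimi's tokenizer, and the window size passed in is whatever limit your model documents.

```python
def estimated_tokens(pages, words_per_page=300, tokens_per_word=1.3):
    """Rough token count for a document of the given length (rule of thumb)."""
    return round(pages * words_per_page * tokens_per_word)

def fits_in_context(pages, context_tokens, reserve_for_output=4_000):
    """Check whether the document plus room for a reply fits in the window."""
    return estimated_tokens(pages) + reserve_for_output <= context_tokens

# Example with an assumed 128,000-token window; substitute your model's limit.
WINDOW = 128_000
for pages in (50, 200, 2_000):
    verdict = "fits" if fits_in_context(pages, WINDOW) else "needs splitting"
    print(f"{pages} pages ~ {estimated_tokens(pages)} tokens: {verdict}")
```

Anything that does not fit has to be split into chunks or summarised in stages, which is exactly the chore a larger window spares you.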

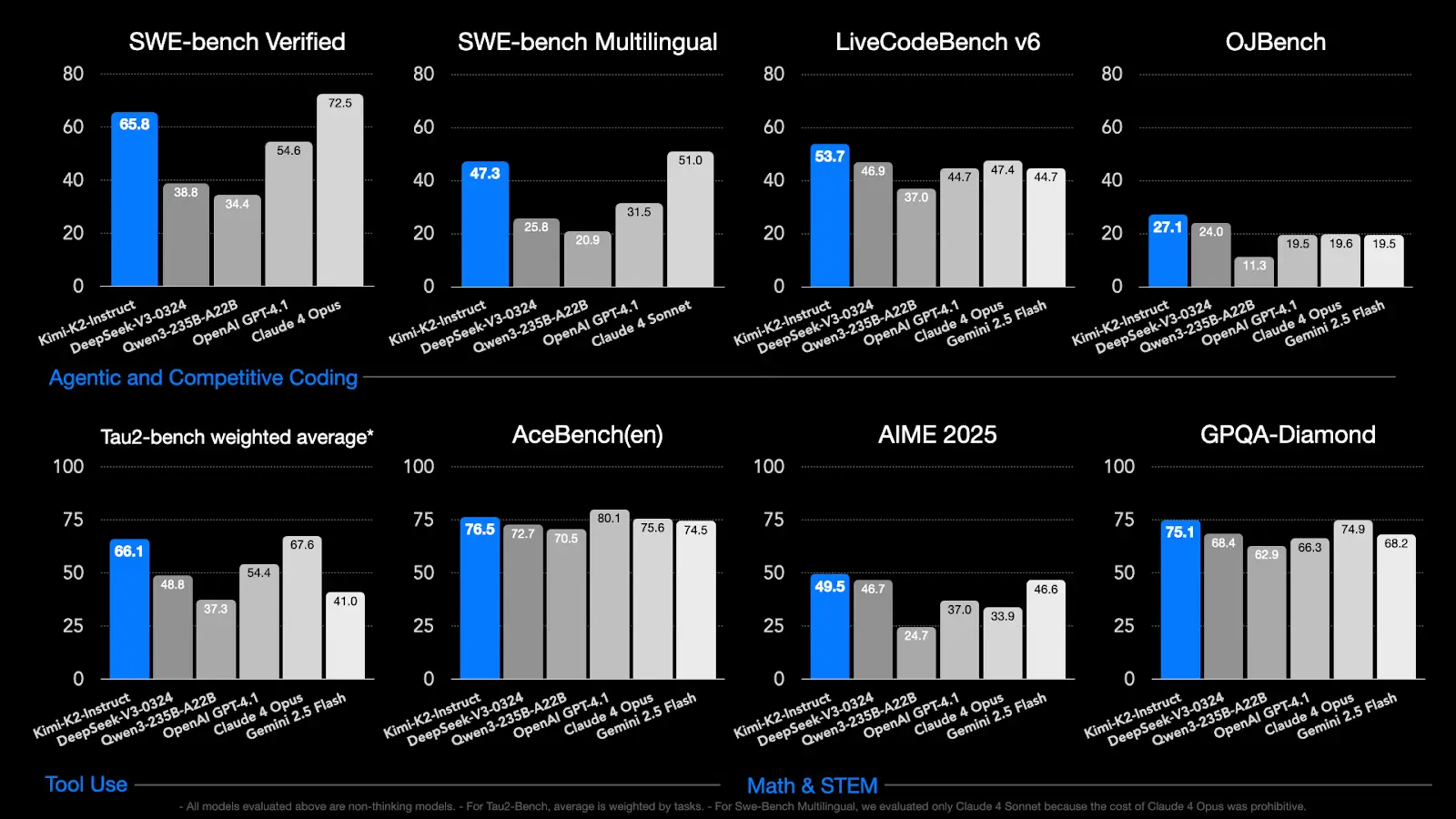

Here’s how it fares in comparison to other competitors:

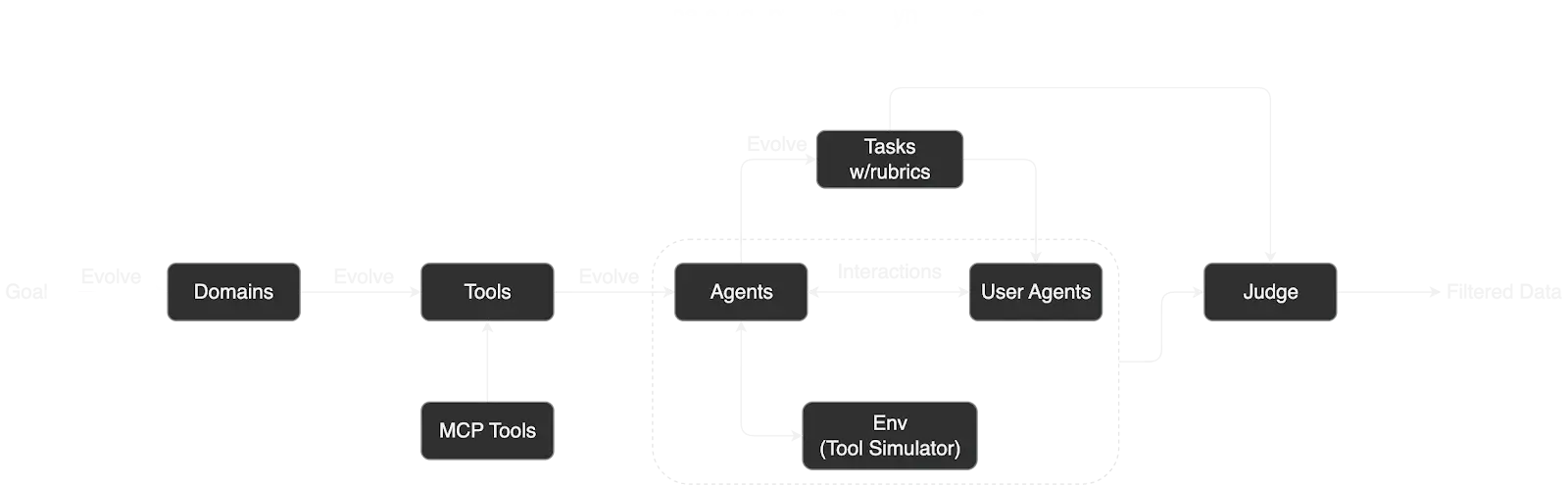

Kimi K2 can handle both research and agentic work. The agentic capabilities come from a training pipeline that simulates real-world tool-usage scenarios and generates agents with diverse tool sets.

What this means in practice is that while you can load in a 200-page research paper and get Kimi K2 to break it down in anywhere from a few seconds to a few minutes, it can also perform tasks like booking a flight to the US to watch Chelsea lift the Club World Cup, or maybe booking a date for your sneaky link while your wife is on vacation at her parents’ (you cheat!).

Kimi K2 also continuously evaluates itself, so that the agents carrying out these goal-based tasks perform as well as possible.

Additionally, Kimi K2 uses reinforcement learning on tasks with no clear-cut reward, such as a basic prompt like “create a thread on X about the pains of vibe-coding.” Such prompts differ from the goal-based tasks in the examples above, where success can be checked directly, so they require a self-judging mechanism in which the model acts as its own critic in order to provide the best response.

Getting started with Kimi

Kimi is available on the web and as an app that you can download from the App Store. Users can also build agents using the Kimi API.

Additionally, improvements such as the MCP features for Kimi on web and app will be made available soon.

To run Kimi locally, ensure your system meets the requirements.

Kimi K2 runs best on Linux with high-end hardware: for the quantized versions, a GPU with at least 24GB of VRAM and 256GB of RAM, plus Python 3.10+.

Proceed to install dependencies such as huggingface_hub and hf_transfer via pip.

Download the quantized Instruct variant from Hugging Face using code like:

`snapshot_download(repo_id="unsloth/Kimi-K2-Instruct-GGUF", local_dir="Kimi-K2-Instruct-GGUF", allow_patterns=["*UD-TQ1_0*"])`,

which is about 245GB and suitable for consumer hardware.
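Put together as a small script, the download step might look like the sketch below. The repo ID, folder name, and file pattern are the ones quoted above; the wrapper function `download_quantized_kimi` is a hypothetical name for illustration, and the environment flag is there because `hf_transfer` is only used when it is set before `huggingface_hub` is imported.

```python
import os

# Opt in to hf_transfer for faster downloads. The flag is read when
# huggingface_hub is imported, so it has to be set first.
os.environ.setdefault("HF_HUB_ENABLE_HF_TRANSFER", "1")

REPO_ID = "unsloth/Kimi-K2-Instruct-GGUF"
LOCAL_DIR = "Kimi-K2-Instruct-GGUF"
PATTERNS = ["*UD-TQ1_0*"]  # only fetch the smallest quantized variant

def download_quantized_kimi(repo_id=REPO_ID, local_dir=LOCAL_DIR, patterns=PATTERNS):
    """Download the matching files and return the local folder path."""
    # Imported here so the flag above is already in place.
    # Requires: pip install huggingface_hub hf_transfer
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=repo_id,
        local_dir=local_dir,
        allow_patterns=patterns,
    )

# Uncomment to start the (very large) download:
# model_dir = download_quantized_kimi()
# print("Model files saved to", model_dir)
```

The call is left commented out on purpose: make sure you have the disk space before you kick it off.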

Once downloaded, set up an inference engine like llama.cpp for efficient runs: clone the repo, build it with CUDA support, and run the model with a command such as:

`./llama-cli -m path/to/model.gguf --prompt "Hello, Kimi!"` (older llama.cpp builds name this binary `./main`).

For faster performance on powerful setups, use vLLM by installing it via pip and running a server with

`python -m vllm.entrypoints.openai.api_server --model moonshotai/Kimi-K2-Instruct`.

Interact via an OpenAI-compatible client for chat or tool-calling tasks, adjusting parameters like temperature for balanced outputs. If you do not have adequate hardware, consider trying cloud options.
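As a rough illustration of that last step, here is a minimal client that talks to the vLLM server using nothing but the Python standard library. It assumes the server is listening on `http://localhost:8000/v1` (vLLM's default port); the helper names `build_chat_request` and `chat` and the temperature of 0.6 are illustrative choices, not official recommendations.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: default vLLM host and port
MODEL = "moonshotai/Kimi-K2-Instruct"  # must match the --model passed to the server

def build_chat_request(prompt, temperature=0.6, max_tokens=256):
    """Build the JSON body for an OpenAI-style chat completion call."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # lower = more focused, higher = more varied
        "max_tokens": max_tokens,
    }

def chat(prompt, base_url=BASE_URL, **options):
    """Send the prompt to the server and return the reply text."""
    body = json.dumps(build_chat_request(prompt, **options)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]

# With the server running:
# print(chat("Hello, Kimi!", temperature=0.3))
```

Because the endpoint follows the OpenAI format, the official `openai` Python package pointed at the same base URL should work just as well.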

Concluding thoughts

The race to AGI is becoming increasingly feisty and unpredictable. For perspective, between the time I started writing this article and getting to the conclusion, OpenAI released a new agent model.

However, considering that a few months ago, Moonshot was a largely unknown player in the race to AGI, and now it is releasing models with capabilities that blow so many other models out of the water, it is something to cheer for.

What’s even more interesting is that at the moment, Kimi K2 is the most powerful open-weight non-reasoning model out there, making it a serious challenger against other pay-walled models.

The Moonshot team have themselves stated that they’re not done yet: there’s still a lot of work to be done, and Kimi K2 is not nearly as perfect as it should be.

For example, it still has limitations, such as generating excessive tokens when confronted with complex reasoning tasks, and its performance can decline on certain tasks when tool use is enabled.

There’s still a long way to go, but all the signs point to a really good future for whatever is coming next for Kimi by Moonshot.
